Generate RoadRunner Scene with Traffic Signs Using Recorded Sensor Data

This example shows how to generate a road scene containing traffic signs extracted from labeled camera and lidar sensor data.

In this example, you create a virtual scene from recorded sensor data that represents a real-world scene containing traffic signs, which you can use to perform verification of automated driving functionality. Camera images enable you to easily identify scene elements, such as traffic signs, while lidar point clouds enable you to accurately measure their distances and dimensions. Additionally, you can improve the labeling of traffic signs in images by using a variety of object detectors that perform reliably regardless of the camera used. Labeling traffic signs directly using only lidar point clouds is difficult, as the point clouds generally do not contain RGB information. Therefore, this example uses traffic signs labeled in camera frames and leverages lidar data to get accurate depth and dimension measurements. Combining this extracted information, you can create a high-definition scene in RoadRunner that contains traffic signs.

In this example, you:

Load, explore, and visualize the recorded camera and the lidar data.

Extract the location, dimension, and orientation information of the traffic signs.

Estimate the traffic signpost information based on the extracted traffic sign information.

Create a RoadRunner scene.

Build and view the RoadRunner scene in RoadRunner Scene Builder. You can also export the scene to ASAM OpenDRIVE®.

Load Sensor Data

This example requires the Scenario Builder for Automated Driving Toolbox™ support package. Check if the support package is installed, and, If not, installed, install it using Add-On Explorer. for more information, see Get and Manage Add-Ons.

checkIfScenarioBuilderIsInstalled

Download a ZIP file containing a subset of sensor data from the PandaSet data set, and then unzip the file. The file contains this data:

A continuous sequence of 80 time-synchronized point clouds and images.

Traffic sign annotations in pixel coordinates. If your data does not have annotations, you can annotate the images by using a detector, or you can label them by using the Ground Truth Labeler app.

The camera sensor pose in world coordinates.

The lidar sensor pose in world coordinates.

GPS data, time synchronized with the point clouds and images.

dataFolder = tempdir; sensorDataFileName = "PandasetTrafficSignsData.zip"; url = "https://ssd.mathworks.com/supportfiles/driving/data/" + sensorDataFileName; filePath = fullfile(dataFolder,sensorDataFileName); if ~isfile(filePath) websave(filePath,url) end unzip(filePath,dataFolder) dataset = fullfile(dataFolder,"PandasetTrafficSignsData");

Get the lidar data, camera detections data, pose of the camera sensor with respect to the lidar sensor, camera intrinsic parameters, and GPS data from the raw PandaSet sensor data by using the helperLoadPandasetTrafficSignsData helper function.

sensorData = helperLoadPandasetTrafficSignsData(dataset);

Load the lidar data into the workspace.

lidarData = sensorData.lidarData;

lidarData is a table with these columns:

timestamp— Time, in seconds, at which the lidar point cloud data was collected.filename— Filename of the lidar point cloud data in PCD format.position— Position of the lidar sensor in world coordinates. Units are in meters.orientation— Orientation of the lidar sensor specified as a quaternion, in world coordinates.

If your data does not contain the position and orientation information of the lidar sensor, you can estimate those by registering the point clouds. For more information about point cloud registration, see the Build a Map from Lidar Data example.

Display the first five entries of lidarData.

lidarData(1:5,:)

ans=5×4 table

timestamp filename position heading

__________ __________________ _________________________________ _________________________________________________

1.5576e+09 "113\lidar\00.pcd" 0 0 0 0.90784 -0.014844 -0.0052725 0.41902

1.5576e+09 "113\lidar\01.pcd" -1.2433 1.0439 -0.037975 0.90806 -0.013039 -0.0062879 0.4186

1.5576e+09 "113\lidar\02.pcd" -2.4896 2.0884 -0.080064 0.90828 -0.010271 -0.005579 0.4182

1.5576e+09 "113\lidar\03.pcd" -3.7343 3.1347 -0.1191 0.90845 -0.0086564 -0.0051139 0.41787

1.5576e+09 "113\lidar\04.pcd" -4.9752 4.1805 -0.137 0.90849 -0.0085758 -0.0058968 0.41778

Create a CombinedDatastore object to store the lidar data.

% Append the source directory with the filenames to obtain a full path to the files. lidarData.filename = fullfile(dataset,lidarData.filename); % Create a combined datastore. cdsLidar = helperCreateCombinedDatastoreLidar(lidarData);

The point clouds in lidarData are in world coordinates, which you must transform into the lidar sensor frame. For more information on world and sensor coordinate frames, see Coordinate Systems in Automated Driving Toolbox. According to the sensor coordinate system, the positive x-axis must point forward from the ego vehicle, and the positive y-axis must point to the left of the ego vehicle for each point cloud. However, for the PandaSet data set, in the lidar sensor frame, the ego vehicle faces toward the positive y-axis and the left of the ego vehicle is along the negative x-axis. Thus, you must apply a rotation of –90 degrees around the positive z-axis.

Transform the point clouds from the world coordinate system to the lidar sensor frame by using the helperTransformPointCloudFcn helper function.

% Specify true if the point clouds are in world coordinates. Otherwise, specify false. isWorldFrame = true; % Specify the rotation around the x-, y-, and z-axes, in degrees, to make the % lidar sensor frame follow the Automated Driving Toolbox coordinate % system convention. rotationInLidarFrame = [0 0 -90]; % Create a transformed datastore. cdsLidar = transform(cdsLidar,@(data) helperTransformPointCloudFcn(data,isWorldFrame,rotationInLidarFrame));

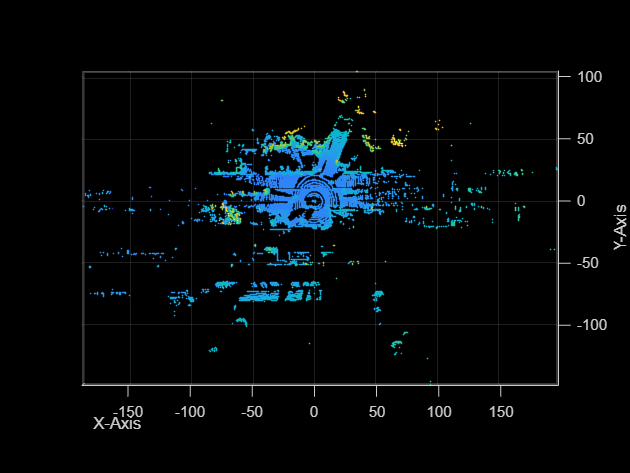

Visualize the data of the first point cloud from the transformed lidar data.

In this figure, the ego vehicle is present at (0, 0) in xy-coordinates. The positive x-axis points forward from the ego vehicle and the positive y-axis points to the left of the ego vehicle.

pcData = read(subset(cdsLidar,1));

pcLidar = pcData{1};

ax = pcshow(pcLidar);

view(ax,2)

xlabel("X-Axis")

ylabel("Y-Axis")

Load the camera data into the workspace.

cameraDetections = sensorData.cameraDetections;

cameraDetections is a table with these columns:

timestamp— Time, in seconds, at which the image frame was captured.filename— Camera image filename.boxes— Bounding boxes corresponding to the traffic signs.labels— Class labels corresponding to the bounding boxes.

The first detected traffic sign bounding boxes in cameraDetections are in frame 33. Display five entries of cameraDetections, starting from the 33rd frame.

cameraDetections(33:37,:)

ans=5×4 table

timestamp filename boxes labels

__________ ________________________________ __________________ __________________________

1.5576e+09 "113\camera\front_camera\32.jpg" {[1255 399 42 43]} {[yieldHereToPedestrians]}

1.5576e+09 "113\camera\front_camera\33.jpg" {[1269 400 45 45]} {[yieldHereToPedestrians]}

1.5576e+09 "113\camera\front_camera\34.jpg" {[1285 397 47 47]} {[yieldHereToPedestrians]}

1.5576e+09 "113\camera\front_camera\35.jpg" {[1301 384 50 50]} {[yieldHereToPedestrians]}

1.5576e+09 "113\camera\front_camera\36.jpg" {[1320 375 53 51]} {[yieldHereToPedestrians]}

Create a combinedDatastore object to store the camera detection data.

% Append source directory with the filenames to obtain a full path to the files. cameraDetections.filename = fullfile(dataset,cameraDetections.filename); % Create a combined datastore. cdsCamera = helperCreateCombinedDatastoreCamera(cameraDetections);

Estimate Traffic Signs

cameraDetections contains traffic sign detections in pixel coordinates because it is easier to detect and annotate those in camera images. To import these detections into RoadRunner, you must estimate their corresponding position, dimension, and orientation cuboid parameters in world coordinates. To accurately estimate of these cuboid parameters, you must use lidar point cloud data.

This example uses this lidar and camera information to transform traffic sign detections in pixel coordinates into cuboids in world coordinates.

Time-synchronized point cloud data and camera images.

Camera intrinsic parameters.

Pose of the lidar sensor in world coordinates.

Pose of the camera sensor with respect to the lidar sensor.

Load the camera intrinsic parameters into the workspace.

cameraParameters = sensorData.cameraParameters;

Create a cameraIntrinsics object to store the intrinsic camera parameters. If you do not know your camera intrinsic parameters, you can estimate them. For more information about estimating camera parameters, see Calibrate a Monocular Camera. You can also use the estimateMonoCameraFromScene function to estimate approximate camera parameters directly from a camera image.

intrinsics = cameraIntrinsics(cameraParameters.focalLength,cameraParameters.principalPoint,cameraParameters.imageSize)

intrinsics =

cameraIntrinsics with properties:

FocalLength: [1.9700e+03 1.9700e+03]

PrincipalPoint: [970.0002 483.2988]

ImageSize: [1080 1920]

RadialDistortion: [0 0]

TangentialDistortion: [0 0]

Skew: 0

K: [3×3 double]

Load the pose of the camera sensor with respect to the lidar sensor. If you do not know this pose information, see the Lidar and Camera Calibration (Lidar Toolbox) example.

cameraToLidarPose = sensorData.cameraToLidarPose;

Because you have transformed the lidar data into the lidar sensor frame, you must apply the same transformation to the pose of the camera sensor with respect to the lidar sensor.

Transform the pose of the camera sensor to the lidar sensor frame by using the helperTransformCameraToLidarPose helper function.

cameraToLidarPose = helperTransformCameraToLidarPose(cameraToLidarPose,rotationInLidarFrame)

cameraToLidarPose=80×1 struct array with fields:

position

heading

Estimate cuboids for traffic signs by using the helperEstimateSignCuboids helper function. This helper function also returns a label and a lidar frame index for each estimated cuboid. Specify minimum and maximum aspect ratio threshold values for traffic sign cuboids to filter invalid estimations. The aspect ratio of a traffic sign cuboid is the ratio of its height to its width. The helper function considers cuboids with an aspect ratio within the specified threshold range valid.

asprThreshold = [0.5 2]; cuboidSigns = helperEstimateSignCuboids(cdsCamera,cdsLidar,intrinsics,cameraToLidarPose,asprThreshold)

cuboidSigns = struct with fields:

cuboids: [63×9 double]

labels: [63×1 categorical]

frameIndices: [63×1 double]

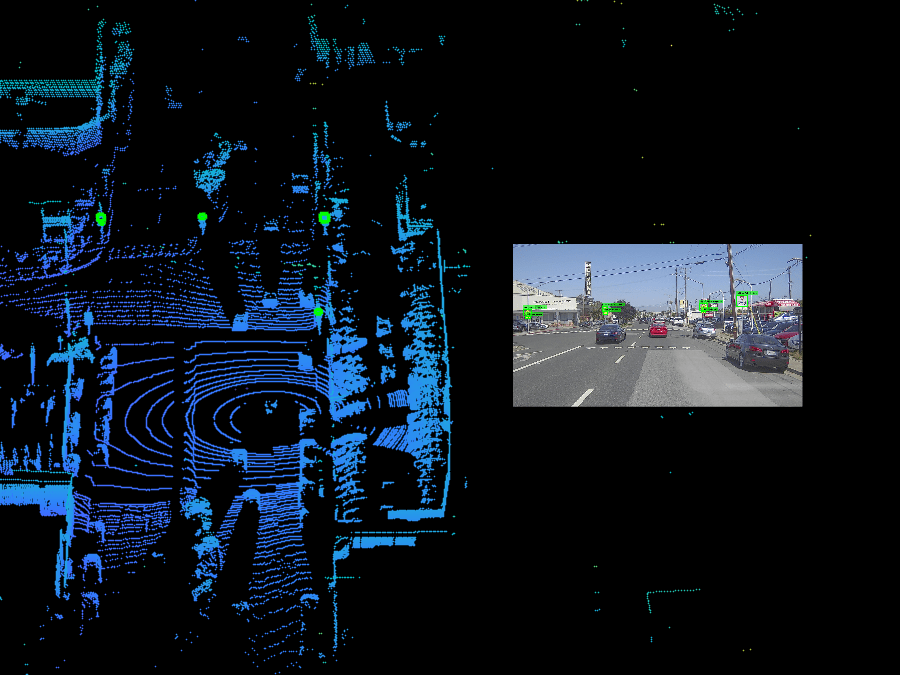

Visualize the 43rd image frame, with traffic sign detections, and its respective lidar point cloud data, with estimated cuboids.

idx = 43; helperVisualizePointCloudAndImage(cdsLidar,cdsCamera,cuboidSigns,idx)

Because you can detect the same traffic sign in multiple image frames, you might have obtained multiple cuboids corresponding to a single traffic sign. Remove those duplicate cuboids by using the helperRemoveDuplicateSigns helper function. This helper function uses distance-based clustering to remove redundancy.

distanceThreshold = 1.5; % Units are in meters

cuboidSigns = helperRemoveDuplicateSigns(cuboidSigns,distanceThreshold)cuboidSigns = struct with fields:

cuboids: [7×9 double]

labels: [7×1 categorical]

frameIndices: [7×1 double]

Sometimes, two or more traffic signs can be stacked on a single signpost. Use the helperGroupStackedSigns helper function to identify and group such cuboids in cell arrays.

cuboidSigns = helperGroupStackedSigns(cuboidSigns)

cuboidSigns = struct with fields:

cuboids: {4×1 cell}

labels: {4×1 cell}

frameIndices: [4×1 double]

Estimate Traffic Signposts

The detections in the camera image frame contain only traffic sign information. To create a scene with traffic signs, you must also specify traffic signposts, the structures that hold the traffic signs. These are typically poles. In this section, you estimate cuboids that store information regarding the positions, dimensions, and orientations of traffic signposts in world coordinates. You can perform these estimations by using the cuboid parameters specific to each traffic sign, along with the corresponding lidar point cloud data and the associated pose of the lidar sensor in world coordinates.

Estimate the cuboids for traffic signposts by using the helperEstimateSignPostCuboids helper function.

cuboidSignPosts = helperEstimateSignPostCuboids(cuboidSigns,cdsLidar);

Create RoadRunner HD Map

Arrange the obtained cuboids for traffic signs and signposts in world coordinates by using the helperArrangeCuboids helper function. The returned table has these two columns.

label— Label of the cuboids. For traffic signs, this is the respective class label, and for traffic sign posts, this is"signPosts".cuboids— Cuboids in world coordinates in the form [xctr,yctr,zctr,xlen,ylen,zlen,xrot,yrot,zrot]. Thexctr,yctr, andzctrvalues specify the center coordinates of the cuboid. Thexlen,ylen, andzlenvalues specify the length of the cuboid, in meters, along the x-, y-, and z-axes, respectively. Thexrot,yrot, andzrotvalues specify the rotation angles, in degrees, for the cuboid along the x-, y-, and z-axes, respectively. These angles are clockwise-positive when looking in the forward direction of their corresponding axes.

trafficSignCuboids = helperArrangeCuboids(cuboidSigns,cuboidSignPosts)

trafficSignCuboids=5×2 table

label cuboids

_________________________ _____________________________________________________________

"leftDiagonalArrow" {[-83.2972 78.4752 1.0065 0.0848 0.5525 0.3125 0 0 318.0693]}

"pedestrianCrossingAhead" {3×9 double }

"rightDiagonalArrow" {2×9 double }

"yieldHereToPedestrians" {[-64.5882 61.7578 1.6152 0.1285 0.7594 0.6695 0 0 324.6320]}

"signPosts" {4×9 double }

Create an empty RoadRunner HD Map.

rrMap = roadrunnerHDMap;

This example provides a prebuilt RoadRunner HD Map for the roads for better visualization of the generated scene with traffic signs. If you do not have roads for your data, you can build a scene by using the Generate RoadRunner Scene Using Labeled Camera Images and Raw Lidar Data example. Add road data to the RoadRunner HD Map rrMap.

read(rrMap,"mapWithRoads.rrhd")Load the GPS data, which is time synchronized with the camera and lidar data into the workspace.

gpsData = sensorData.gpsData;

Set the geographic reference of the map to the start of the sequence. If you do not have GPS data, specify the geographic reference as [0 0].

rrMap.GeoReference = [gpsData.latitude(1) gpsData.longitude(1)];

To import traffic signs and signposts into the RoadRunner HD Map, you must generate their static object information in the roadrunner.hdmap.Sign and roadrunner.hdmap.StaticObject formats, respectively. Use the roadrunnerStaticObjectInfo function to generate static object information for traffic signs and signposts.

Store the cuboid parameters for each label of the traffic signs and signposts in a structure.

Map each label in

trafficSignCuboidsto a sign type supported by theroadrunnerStaticObjectInfofunction.If any traffic sign label does not match with the list of supported traffic signs in

roadrunnerStaticObjectInfo, then you can store the cuboid parameters for that label in a field namedmiscellaneousSign, and specify a RoadRunner asset for that traffic sign by using theParamsname-value argument.

Specify your mapping for the traffic sign labels in the helperMapLabelsToRoadRunnerSignType helper function.

staticObjects = helperMapLabelsToRoadRunnerSignTypes(trafficSignCuboids);

Generate static object information in the RoadRunner HD Map format for the specified traffic signs and signposts.

objectsInfo = roadrunnerStaticObjectInfo(staticObjects);

Update the map with static object information.

rrMap.SignTypes = objectsInfo.signTypes; rrMap.Signs = objectsInfo.signs; rrMap.StaticObjectTypes = objectsInfo.staticObjectTypes; rrMap.StaticObjects = objectsInfo.staticObjects;

Write the RoadRunner HD Map to a binary file, which you can import into the Scene Builder Tool.

write(rrMap,"TrafficSignScene.rrhd")Build RoadRunner Scene

Open the RoadRunner application by using the roadrunner (RoadRunner) object. Import the RoadRunner HD Map data from your binary file, and build it in the currently open scene.

Specify the path to your local RoadRunner installation folder. This code shows the path for the default installation location on Windows®.

rrAppPath = "C:\Program Files\RoadRunner R2023a\bin\win64";Specify the path to your RoadRunner project. This code shows the path to a sample project folder on Windows.

rrProjectPath = "C:\RR\MyProject";Open RoadRunner using the specified path to your project.

rrApp = roadrunner(rrProjectPath,InstallationFolder=rrAppPath);

Copy the TrafficSignScene.rrhd scene file into the asset folder of the RoadRunner project, and import it into RoadRunner.

copyfile("TrafficSignScene.rrhd",(rrProjectPath + filesep + "Assets")) file = fullfile(rrProjectPath,"Assets","TrafficSignScene.rrhd"); % Set the world origin of the RoadRunner scene to the natural origin of the % RoadRunner HD Map. geoRef = [rrMap.readCRS().ProjectionParameters.LatitudeOfNaturalOrigin rrMap.readCRS().ProjectionParameters.LongitudeOfNaturalOrigin]; changeWorldSettings(rrApp,WorldOrigin=geoRef) % Import the RoadRunner HD Map into RoadRunner. importScene(rrApp,file,"RoadRunner HD Map")

This figure shows an image frame and its scene built using the Scene Builder Tool. Observe the traffic signs attached to signposts in the built scene.

Note: Use of the Scene Builder Tool requires a RoadRunner Scene Builder license, and use of RoadRunner assets requires a RoadRunner Asset Library Add-On license.

Helper Functions

helperCreateCombinedDatastoreLidar — Creates a combined datastore for storing lidar point clouds and lidar sensor pose information.

function cdsLidar = helperCreateCombinedDatastoreLidar(lidarData) pcds = fileDatastore(lidarData.filename,"ReadFcn",@pcread); lidarPoses = rigidtform3d.empty; for i = 1:size(lidarData,1) rot = quat2rotm(quaternion(lidarData.heading(i,:))); tran = lidarData.position(i,:); pose = rigidtform3d(rot,tran); lidarPoses(i,1) = pose; end poses = arrayDatastore(lidarPoses); cdsLidar = combine(pcds,poses); end

helperCreateCombinedDatastoreCamera — Creates a combined datastore for storing camera images and associated detection information.

function cdsCamera = helperCreateCombinedDatastoreCamera(cameraData) imds = imageDatastore(cameraData.filename); blds = boxLabelDatastore(cameraData(:,3:end)); cdsCamera = combine(imds,blds); end

References

[1] Hesai and Scale. PandaSet. Accessed September 18, 2025. https://pandaset.org/. The PandaSet data set is provided under the CC-BY-4.0 license.

See Also

Functions

roadrunnerLaneInfo|roadrunnerStaticObjectInfo|getMapROI|roadprops|selectActorRoads|updateLaneSpec|actorprops

Objects

Topics

- Overview of Scenario Generation from Recorded Sensor Data

- Generate RoadRunner Scene with Trees and Buildings Using Recorded Lidar Data

- Generate RoadRunner Scene Using Processed Camera Data and GPS Data

- Generate RoadRunner Scene Using Labeled Camera Images and Raw Lidar Data

- Generate RoadRunner Scene from Recorded Lidar Data

- Generate RoadRunner Scenario from Recorded Sensor Data

- Extract Lane Information from Recorded Camera Data for Scene Generation

- Extract Vehicle Track List from Recorded Camera Data for Scenario Generation