Seeing the Road Ahead

The Path Toward Fully Autonomous, Self-Driving Cars

MATLAB and Simulink for Startups

More than 7,000 startups just like this one are accessing low-cost tools plus MathWorks engineering support and discounted training.

At the 1939 New York World’s Fair, General Motors unveiled its vision of a future world that supported smart highways and self-driving cars. Although that dream has yet to be fully realized some 80 years later, autonomous car technology has advanced considerably. Networks of sensors (including cameras that read road and traffic signs, ultrasonics that sense nearby curbs, laser-based lidar for seeing 200 meters out or more, and radar that measures range and velocity) are being developed to assist drivers. Paired with artificial intelligence, these technologies help drivers park, back up, brake, accelerate, and steer; detect lane boundaries; and even prevent sleepy motorists from drifting off behind the wheel.

Although these advances have not yet completely replaced a human in the driver’s seat, doing so could save lives. According to the latest numbers from the National Highway Traffic Safety Administration, nearly 36,000 people in the United States died in traffic accidents in 2018—with more than 90% of those accidents caused by human error. Pedestrian fatalities have risen by 35% in the past decade, reaching more than 6,000 per year. Vehicle perception technology that could “see” its surroundings better than a human and react more quickly could significantly reduce injuries and deaths.

While there is agreement that perception technology will surpass human ability to see and sense the driving environment, that’s where the agreement ends. The automotive industry has not yet reached consensus on a single technology that will lead us into the era of driverless cars. In fact, the solution will likely require more than one. Here are three technology companies advancing vehicle perception to usher in a future of fully autonomous, self-driving cars.

Beamsteering Radar

Since the early part of the 20th century, radar has been used to help ships and planes navigate. Its ability to detect and identify targets and provide accurate velocity information under complicated conditions makes it ideal for autonomous driving.

Engineers at California-based Metawave are pushing the limits of radar to recognize other autos, pedestrians, stationary surroundings, road hazards, and more in all weather conditions and in the dark of night. The company’s analog radar platform, called SPEKTRA™, forms a narrow beam and steers it to detect and classify objects within milliseconds. Abdullah Zaidi, director of engineering at Metawave, says the technology is the highest-resolution analog radar in the automotive sector. It can see pedestrians 250 meters away and recognize vehicles 330 meters away.

It can also tell apart two objects that are separated by only a small angle, a property called angular resolution, which gives the radar the ability to distinguish one object from another. “This is not something that current radars can do,” says Zaidi.
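Angular resolution can be made concrete with a small-angle estimate: two objects at the same range are separable only if their sideways spacing exceeds roughly the range multiplied by the beamwidth in radians. The Python sketch below illustrates that relationship. The function names and the 1-degree and 0.1-degree beamwidths are hypothetical values chosen for illustration, not SPEKTRA specifications.

```python
import math


def cross_range_resolution(range_m: float, beamwidth_deg: float) -> float:
    """Approximate smallest sideways separation (meters) resolvable at a range.

    Uses the small-angle approximation: separation ~ range * beamwidth (radians).
    """
    return range_m * math.radians(beamwidth_deg)


def can_separate(range_m: float, spacing_m: float, beamwidth_deg: float) -> bool:
    """True if two objects spaced spacing_m apart at range_m fall in different beams."""
    return spacing_m > cross_range_resolution(range_m, beamwidth_deg)


if __name__ == "__main__":
    # Hypothetical beamwidths; a narrower beam resolves finer detail at distance.
    for beamwidth in (1.0, 0.1):
        res = cross_range_resolution(250.0, beamwidth)
        print(f"beamwidth {beamwidth} deg -> {res:.2f} m at 250 m")
```

Under these assumed numbers, a 1-degree beam at 250 meters spans about 4.4 meters, too wide to separate a pedestrian from an adjacent car, which is why a narrower beam matters at long range.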

Metawave leverages machine learning and AI to build an analog beamsteering radar system. Image Credit: Metawave Corp.

The way SPEKTRA scans the environment is also different. Unlike conventional digital radar systems that capture all the information at once, analogous to a powerful flashbulb illuminating a scene, Metawave’s radar works more like a laser beam able to see one specific section of space at a time. The beam rapidly sweeps the environment, detecting and classifying all the objects in the vehicle’s field of view within milliseconds. Metawave’s approach increases range and accuracy while reducing interference and the probability of clutter, all with very little computational overhead. “We’re focused on long range and high resolution, which is the hardest problem to solve in automotive radar today,” says Zaidi.

Metawave engineers use MATLAB® to test the range and resolution of the SPEKTRA radar and create the underlying algorithms that process the radar outputs. The technology gives cars self-driving features such as left-turn assist, blind-spot monitoring, automatic emergency braking, adaptive cruise control, and lane assist.

Smarter Lidar

Some of the first self-driving cars, which were developed as part of a competition sponsored by the U.S. government’s Defense Advanced Research Projects Agency (DARPA), used laser-based systems to “see” the environment. The light detection and ranging (lidar) sensing system emits thousands of pulses of light every second that bounce off surrounding objects and reflect back to the vehicle. There, a computer uses each data point, called a voxel, to reconstruct a three-dimensional image of the environment and ultimately control how the car moves.
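The ranging step rests on time of flight: a pulse travels to the object and back, so the distance is the speed of light times the round-trip time, divided by two. The Python sketch below shows that calculation and the conversion of one return into a 3D point. The function names and the axis convention are assumptions made for illustration and do not describe any particular vendor's processing.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def range_from_round_trip(t_seconds: float) -> float:
    """Distance to the reflecting object from the pulse's round-trip time."""
    return SPEED_OF_LIGHT * t_seconds / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one lidar return (range plus beam angles) into an (x, y, z) point.

    Assumed convention: x forward, y left, z up; azimuth measured from x toward y.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


if __name__ == "__main__":
    t = 2 * 200.0 / SPEED_OF_LIGHT  # round-trip time for an object 200 m away
    print(f"round trip: {t * 1e6:.2f} microseconds")
    print(to_cartesian(range_from_round_trip(t), azimuth_deg=10.0, elevation_deg=0.0))
```

An object 200 meters away returns its pulse in roughly 1.33 microseconds, which gives a sense of the timing precision these sensors require.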

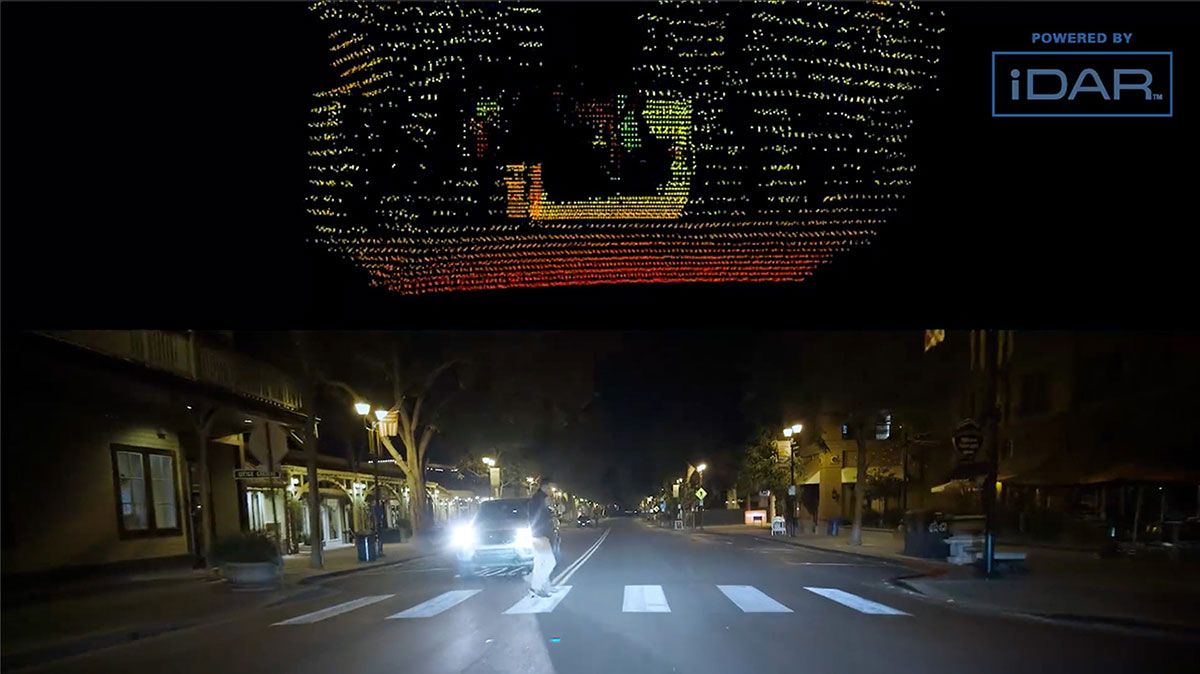

iDAR brings powerful sensing capabilities found in the aerospace and defense industries to the automotive market. Image Credit: AEye, Inc.

Lidar is expensive, though, costing more than $70,000 per vehicle. And using it alone has its limitations. Inclement weather interferes with the signal, so it’s often combined with other sensing technologies, such as cameras, radar, or ultrasonics. But that can produce an overwhelming amount of redundant and irrelevant information that a central computer must parse, says Barry Behnken, cofounder and senior vice president of AEye, based in Dublin, California.

Engineers there have advanced lidar’s capabilities by fusing it with a high-resolution video camera. Their system, called iDAR, for Intelligent Detection and Ranging, creates a new type of data point that merges the high-resolution pixels from a digital camera with the 3D voxels of lidar. They call these points Dynamic Vixels. Because the laser pulses and video camera gather optical information through the same aperture, the data stream is integrated and can be analyzed at the same time, which saves time and processing power.

Unlike traditional lidar systems, which scan a scene equally across the whole environment, iDAR adjusts its light-pulsing patterns to give key areas of the scene more attention. Where to direct the pulses is determined by AEye’s computer vision algorithms. They first analyze the camera data to search for and detect the edges of objects and then immediately zero in with the higher-resolution lidar scans to classify, track, and predict the motion of those objects. Engineers use MATLAB to ensure that the algorithms are scanning the scene using the best, most efficient light-pulsing pattern possible.
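The idea of spending more laser pulses where the camera sees edges can be illustrated with a toy example. The Python sketch below is not AEye's algorithm; it is a simplified stand-in that scores each cell of a small grayscale grid by its intensity differences and divides a fixed shot budget in proportion to that score. All names, the grid, and the budget are hypothetical.

```python
def edge_strength(image):
    """Per-cell edge score: absolute intensity change to the right and below."""
    rows, cols = len(image), len(image[0])
    scores = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            dx = image[r][min(c + 1, cols - 1)] - image[r][c]
            dy = image[min(r + 1, rows - 1)][c] - image[r][c]
            scores[r][c] = abs(dx) + abs(dy)
    return scores


def allocate_shots(image, total_shots, baseline_per_cell=1):
    """Give every cell a baseline shot count, then spread the rest by edge score.

    Fractions are rounded down, so the total may fall slightly under the budget.
    """
    rows, cols = len(image), len(image[0])
    extra = total_shots - baseline_per_cell * rows * cols
    if extra < 0:
        raise ValueError("budget is too small for the baseline scan")
    scores = edge_strength(image)
    total_score = sum(sum(row) for row in scores)
    shots = [[baseline_per_cell] * cols for _ in range(rows)]
    if total_score == 0:
        return shots  # featureless scene: keep the uniform baseline scan
    for r in range(rows):
        for c in range(cols):
            shots[r][c] += int(extra * scores[r][c] / total_score)
    return shots


if __name__ == "__main__":
    scene = [[0, 0, 0],
             [0, 10, 0],
             [0, 0, 0]]  # one bright object in the center
    for row in allocate_shots(scene, total_shots=49):
        print(row)
```

In this toy scene the cells bordering the bright object receive most of the budget, while empty regions keep only the baseline coverage.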

“We’re trying to do as much perception on the sensor side to reduce the load on the vehicle’s central compute side,” says Behnken. Capturing better information faster leads to more accurate perception, while using less laser power than conventional solutions, he says. “Our ultimate goal is to develop a perception system that’s as good as or better than a human’s,” he says.

By selectively allocating additional lidar shots around objects in motion, iDAR is able to classify those objects and calculate direction and velocity. Image Credit: AEye, Inc.

Heat Waves

Advances in lidar, radar, and video camera technology will help move autonomous driving technology into the future. But no sensor can accomplish the job alone. “They all have their strengths and they all have their weaknesses,” says Gene Petilli, vice president and chief technical officer at Owl Autonomous Imaging, based in Fairport, New York.

Conventional lidar is extremely accurate, but snow, rain, and fog reduce its ability to tell animate from inanimate objects, says Petilli. Traditional radar, on the other hand, can see through the snow, is excellent at long distances, and can judge the relative speed of objects, but it alone cannot distinguish what those objects are. Cameras can classify as well as read traffic lights and street signs, but glare can disrupt the quality, and at night, they can only see what the headlights illuminate.

Thermal imaging from a prototype Owl AI. Video Credit: Owl Autonomous Imaging

“The trick is to pick a suite of sensors that don’t have the same weaknesses,” says Petilli.
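One way to picture that selection rule is to list each sensor's weak spots and check whether any weakness is shared by every sensor in a candidate suite. The Python sketch below does this using only the weaknesses Petilli describes above; the labels and function name are hypothetical simplifications, not an engineering method from Owl AI.

```python
# Weaknesses as described in the article, reduced to simple labels.
WEAKNESSES = {
    "lidar": {"snow", "rain", "fog"},
    "radar": {"classification"},
    "camera": {"glare", "night"},
}


def shared_weaknesses(suite):
    """Return the weaknesses that every sensor in the suite has in common.

    An empty result means that, for each listed condition, at least one
    sensor in the suite is not impaired by it.
    """
    sets = [WEAKNESSES[name] for name in suite]
    return set.intersection(*sets) if sets else set()


if __name__ == "__main__":
    print(shared_weaknesses(["lidar"]))
    print(shared_weaknesses(["lidar", "radar", "camera"]))
```

A single sensor always shares its weaknesses with itself, while the combined suite leaves no condition in which every sensor struggles.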

Owl AI’s team fills in the gaps with 3D thermal imaging, which senses heat signatures given off by people and animals and greatly simplifies object classification. Called Thermal Ranging™, the company’s sensor is a passive system (meaning it doesn’t have to emit energy or light and wait until it bounces back) that can pick up the infrared heat of a living object. It sees the object, whether it’s moving or stationary, in day or night and in any weather conditions, up to 400 meters away, and can calculate the object’s 3D range and velocity up to 100 meters away.

The device is made of a main lens, similar to that found in a regular camera, plus an array of very small lenses positioned between the main lens and a detector. The array breaks the scene into a mosaic of images, each one looking at the object of interest from a different angle. An algorithm measures the subtle differences between the images to calculate how far away the object is.
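The article does not detail Owl AI's algorithm, but the general principle of ranging from slightly different viewpoints is triangulation: the closer an object is, the more it shifts between two views. The Python sketch below uses the textbook two-view relation, range = focal length × baseline / disparity, as a simplified stand-in for a microlens array. The focal length, baseline, and disparity values are hypothetical.

```python
def range_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Estimate range (meters) from the pixel shift of an object between two views.

    Simplified pinhole two-view model; a real microlens array has many views
    and requires calibration for lens distortion.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    f_px, baseline = 1000.0, 0.1  # hypothetical optics
    for d in (1.0, 1.1):
        print(f"disparity {d} px -> {range_from_disparity(f_px, baseline, d):.1f} m")
```

With these assumed numbers, a shift of just 0.1 pixel moves the estimate from 100 meters to about 91 meters, which illustrates why small measurement errors matter so much at long range.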

Petilli says the company is using MATLAB to perfect the system. Because they’re trying to measure very small differences between elements in the microlens array, any distortion in the lens can create errors in the range calculation. So, they model the entire system in MATLAB to perfect the algorithms that correct for the lens distortion. They also run driving simulations to train the deep neural network AI algorithm that creates the 3D thermal images. The team also plans to use deep learning to evaluate neural network algorithms that convert the mosaic of images into a 3D map.

“Autonomous vehicles won’t be accepted by the public until they are safer than a human driver,” says Petilli.

Enhancing Safety

Vehicle perception technologies are key to providing a safe automated driving experience. To deliver on the promise of fully autonomous, self-driving cars, tech companies are using AI and computer vision to help vehicles see and sense their environment. And although fully autonomous cars aren’t the norm yet, these companies are bringing us closer while improving the safety systems in new cars today.
