tunefisOptions

Option set for tunefis function

Description

Use a tunefisOptions object to specify options for tuning fuzzy systems using the tunefis function. You can specify options such as the optimization method, optimization type, and distance metric for optimization cost calculation.

Creation

Description

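opt = tunefisOptions creates a default option set for the tunefis function. To modify the properties of this option set for your specific application, use dot notation.

opt = tunefisOptions(PropertyName=Value) sets properties of the option set using one or more name-value arguments.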
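The two creation syntaxes can be sketched as follows. The property values are chosen only for illustration, and the sketch assumes that Fuzzy Logic Toolbox and Global Optimization Toolbox software are installed.

```matlab
% Create a default option set, then adjust properties with dot notation.
opt = tunefisOptions;
opt.DistanceMetric = "norm2";
opt.OptimizationType = "learning";

% Set the same properties in one step using name-value arguments.
opt2 = tunefisOptions(DistanceMetric="norm2",OptimizationType="learning");
```

Both option sets should end up with the same DistanceMetric and OptimizationType values.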

Properties

Method

Tuning algorithm, specified as one of these values:

- "ga": genetic algorithm
- "particleswarm": particle swarm
- "patternsearch": pattern search
- "simulannealbnd": simulated annealing algorithm
- "anfis": adaptive neuro-fuzzy inference system

These tuning algorithms use solvers from the Global Optimization Toolbox software, except for "anfis". The MethodOptions property differs for each algorithm and corresponds to the options input argument for the respective solver. If you specify MethodOptions without specifying Method, then the tuning method is determined based on MethodOptions.

The "anfis" tuning method supports tuning only type-1 Sugeno fuzzy inference systems with one output variable.
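As a minimal sketch of the last point, the following code builds solver options with optimoptions and passes only MethodOptions, leaving Method unspecified. The SwarmSize value is an arbitrary choice for illustration, and the variable name psOpts is hypothetical.

```matlab
% Create particle swarm solver options (requires Global Optimization Toolbox).
psOpts = optimoptions('particleswarm','SwarmSize',50);

% Pass only MethodOptions. Per the description above, the tuning method
% is then determined from the type of the solver option object.
opt = tunefisOptions(MethodOptions=psOpts);

% Inspect the resulting tuning method.
disp(opt.Method)
```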

MethodOptions

Tuning algorithm options, specified as an option object for the tuning algorithm specified by Method. This property differs for each algorithm and is created using optimoptions. If you do not specify MethodOptions, tunefis creates a default option object for the tuning method specified in Method. To modify the options in MethodOptions, use dot notation.

OptimizationType

Type of optimization, specified as one of the following:

- "tuning": Optimize the existing input, output, and rule parameters without learning new rules.
- "learning": Learn new rules up to the maximum number of rules specified by NumMaxRules.

The "anfis" algorithm supports only "tuning" optimization.

NumMaxRules

Maximum number of rules in a FIS after optimization, specified as an integer. The number of rules in a FIS after optimization can be less than NumMaxRules, since duplicate rules with the same antecedent values are removed from the rule base.

When NumMaxRules is Inf, tunefis sets NumMaxRules to the maximum number of possible rules for the FIS. This maximum value is computed based on the number of input variables and the number of membership functions for each input variable.

When tuning the parameters of a fistree object, NumMaxRules indicates the maximum number of rules for each FIS in the fistree.

The "anfis" tuning method ignores this option.
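For example, the following sketch requests rule learning while capping each tuned FIS at 10 rules. The limit of 10 is an arbitrary value chosen for illustration.

```matlab
% Learn new rules, keeping at most 10 rules in each tuned FIS.
opt = tunefisOptions(OptimizationType="learning",NumMaxRules=10);

% The limit can also be changed later with dot notation.
opt.NumMaxRules = 20;
```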

IgnoreInvalidParameters

Flag for ignoring invalid parameters, specified as either true or false. When IgnoreInvalidParameters is true, the tunefis function ignores invalid parameter values generated during the tuning process.

The "anfis" tuning method ignores this option.

DistanceMetric

Type of distance metric used for computing the cost for the optimized parameter values with respect to the training data, specified as one of the following:

- "rmse": Root mean squared error
- "norm1": Vector 1-norm
- "norm2": Vector 2-norm

For more information on vector norms, see norm.
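As a rough illustration of the three metrics, the following sketch applies their standard definitions to a made-up error vector. The data and variable names are hypothetical, and the way tunefis assembles the error internally (for example, for systems with several outputs) is not specified here.

```matlab
% Hypothetical reference outputs and FIS outputs for four data points.
y    = [1.0; 2.0; 3.0; 4.0];
yhat = [1.0; 2.5; 2.0; 4.5];

% Error between reference and FIS outputs.
e = y - yhat;

% Standard definitions of the three distance metrics.
costRmse  = sqrt(mean(e.^2));   % root mean squared error
costNorm1 = norm(e,1);          % vector 1-norm: sum of absolute errors
costNorm2 = norm(e,2);          % vector 2-norm: Euclidean length
```

For a vector with N elements, the root mean squared error equals the 2-norm divided by sqrt(N), so "rmse" and "norm2" rank candidate solutions identically on a fixed data set, while "norm1" weights large errors less heavily.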

The "anfis" tuning method supports only the "rmse" metric.

UseParallel

Flag for using parallel computing, specified as either true or false. When UseParallel is true, the tunefis function uses parallel computation in the optimization process. Using parallel computing requires Parallel Computing Toolbox™ software.

The "anfis" tuning method does not support parallel computation.

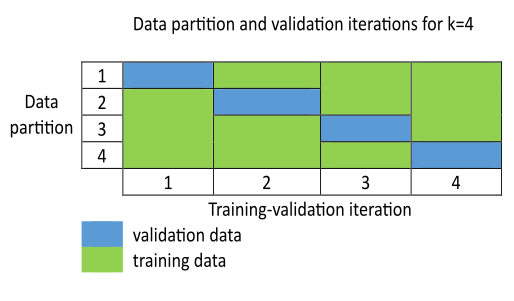

Number of cross validations to perform, specified as a nonnegative integer less than or equal to the number of rows in the training data.

When KFoldValue is 0 or

1, tunefis uses the entire input data set for

training and does not perform validation.

Otherwise, tunefis randomly partitions the input data into

KFoldValue subsets of approximately equal size. The function then

performs KFoldValue training-validation iterations. For each

iteration, one data subset is used as validation data with the remaining subsets used as

training data. The following figure shows the data partition and iterations for

KFoldValue = 4.

For an example that tunes a fuzzy inference system using k-fold cross validation, see Optimize FIS Parameters with K-Fold Cross-Validation.

The "anfis" tuning method ignores this option.
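The partitioning scheme described above can be sketched in plain MATLAB as follows. This is an illustrative reading of the description, not the code that tunefis runs; the row count, the seed, and all variable names are assumptions.

```matlab
rng(0);                      % fixed seed so the sketch is repeatable
numRows = 10;                % hypothetical number of training data rows
k = 4;                       % plays the role of KFoldValue

% Shuffle the row indices, then deal them into k folds of nearly equal size.
shuffled = randperm(numRows);
foldId = mod(0:numRows-1,k) + 1;

valCount = zeros(1,k);
for i = 1:k
    valRows   = shuffled(foldId == i);   % validation rows for iteration i
    trainRows = shuffled(foldId ~= i);   % remaining rows used for training
    valCount(i) = numel(valRows);
    fprintf('Iteration %d: %d training rows, %d validation rows\n', ...
        i,numel(trainRows),numel(valRows));
end
```

Each row serves as validation data in exactly one of the k iterations and as training data in the others.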

ValidationTolerance

Maximum allowable increase in validation cost when using k-fold cross validation, specified as a scalar value in the range [0,1]. A higher ValidationTolerance value produces a longer training-validation iteration, with an increased possibility of data overfitting.

The increase in validation cost, ΔC, is the difference between the average validation cost and the minimum validation cost, Cmin, for the current training-validation iteration. The average validation cost is a moving average with a window size equal to ValidationWindowSize.

tunefis stops the current training-validation iteration when the ratio between ΔC and Cmin exceeds ValidationTolerance.

ValidationTolerance is ignored when KFoldValue is 0 or 1.

The "anfis" tuning method ignores this option.
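One possible reading of this stopping rule is sketched below on a made-up sequence of validation costs. The cost values and variable names are hypothetical, and details such as how tunefis treats the first few values, before the window is full, are assumptions.

```matlab
% Hypothetical validation costs recorded during one training-validation iteration.
validationCost = [0.40 0.39 0.38 0.37 0.36 0.36 0.37 0.39 0.42 0.46 0.50];
tol = 0.1;   % plays the role of ValidationTolerance
win = 5;     % plays the role of ValidationWindowSize

stopIter = [];
for n = 1:numel(validationCost)
    % Minimum validation cost seen so far.
    Cmin = min(validationCost(1:n));
    % Moving average over the last win values (fewer at the start).
    firstIdx = max(1,n-win+1);
    avgCost = mean(validationCost(firstIdx:n));
    % Relative increase of the average over the minimum.
    deltaC = avgCost - Cmin;
    if deltaC/Cmin > tol
        stopIter = n;
        break
    end
end
```

With these values, the ratio first exceeds the tolerance at the tenth cost value, after the validation cost has been rising for several steps. A larger tol or win would delay the stop further.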

ValidationWindowSize

Window size for computing average validation cost, specified as a positive integer. The validation cost moving average is computed over the last N validation cost values, where N is equal to ValidationWindowSize. A higher ValidationWindowSize value produces a longer training-validation iteration, with an increased possibility of data overfitting. A lower window size can cause early termination of the tuning process when the training data is noisy.

ValidationWindowSize is ignored when KFoldValue is 0 or 1.

The "anfis" tuning method ignores this option.

Display

Data to display in the Command Window during training, specified as one of the following values:

- "all": Display both training and validation results.
- "tuningonly": Display only training results.
- "validationonly": Display only validation results.
- "none": Display neither training nor validation results.

Examples

Create a default option set using the particle swarm tuning algorithm.

opt = tunefisOptions(Method="particleswarm")

opt =

tunefisOptions with properties:

Method: "particleswarm"

MethodOptions: [1×1 optim.options.Particleswarm]

OptimizationType: "tuning"

NumMaxRules: Inf

IgnoreInvalidParameters: 1

DistanceMetric: "rmse"

UseParallel: 0

KFoldValue: 0

ValidationTolerance: 0.1000

ValidationWindowSize: 5

Display: "all"

You can modify the options using dot notation. For example, set the maximum number of iterations to 20.

opt.MethodOptions.MaxIterations = 20;

You can also specify other options when creating the option set. In this example, set the OptimizationType to "learning" to learn new rules.

opt2 = tunefisOptions(Method="particleswarm",OptimizationType="learning")

opt2 =

tunefisOptions with properties:

Method: "particleswarm"

MethodOptions: [1×1 optim.options.Particleswarm]

OptimizationType: "learning"

NumMaxRules: Inf

IgnoreInvalidParameters: 1

DistanceMetric: "rmse"

UseParallel: 0

KFoldValue: 0

ValidationTolerance: 0.1000

ValidationWindowSize: 5

Display: "all"
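Once created, an option set is passed to tunefis as the last input argument. The following is a minimal sketch of that step, assuming a single-input, single-output data set and an initial FIS generated with genfis; the data, the number of membership functions, and the iteration limit are made up for illustration.

```matlab
% Made-up training data: one input column and one output column.
x = (0:0.1:10)';
y = sin(2*x)./exp(x/5);

% Initial Sugeno FIS generated from the data by grid partitioning.
genOpt = genfisOptions('GridPartition');
genOpt.NumMembershipFunctions = 5;
fisIn = genfis(x,y,genOpt);

% Tunable settings for the input and output membership functions.
[in,out] = getTunableSettings(fisIn);

% Option set: particle swarm, a few iterations, no progress display.
opt = tunefisOptions(Method="particleswarm",Display="none");
opt.MethodOptions.MaxIterations = 5;

% Tune the FIS parameters using the option set.
fisOut = tunefis(fisIn,[in;out],x,y,opt);
```

The small iteration limit keeps the run short and is unlikely to give a well-tuned system; increase it for real use.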

Version History

Introduced in R2019a

