taylorPrunableNetwork

Description

A TaylorPrunableNetwork object enables support for compression of neural networks using Taylor pruning.

This feature requires the Deep Learning Toolbox™ Model Compression Library support package. This support package is a free add-on that you can download using the Add-On Explorer. Alternatively, see Deep Learning Toolbox Model Compression Library.

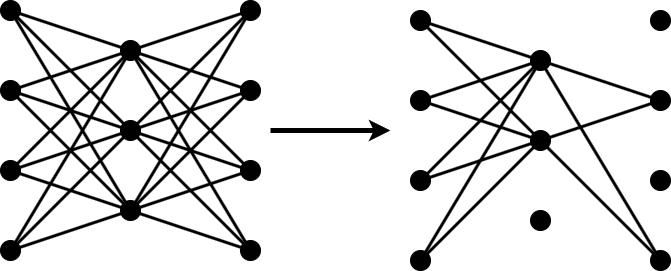

Pruning a neural network means removing the least important parameters to reduce the size of the network while preserving the quality of its predictions as much as possible.

Find the least important parameters in a pretrained network by iterating over these steps:

1. Determine the importance score of the prunable parameters and remove the least important parameters.

2. Retrain the updated network for several iterations.

Removing the least important parameters in each iteration of the pruning loop is computationally expensive. Use a TaylorPrunableNetwork object to simulate pruning by applying a pruning mask. Then, use the object functions to update the mask during the pruning loop. Finally, update the network architecture by converting the network back to a dlnetwork object.
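The idea of simulating pruning with a mask can be illustrated with a small, language-agnostic sketch. The Python code below is not the toolbox implementation or its API. It assumes a hypothetical toy layer in which each filter is a list of weights, and the names `layer_output`, `next_layer`, and `materialize` are invented for illustration. It shows that zeroing a filter with a mask gives the same output as physically removing the filter, which is why the cheaper mask update can stand in for an architecture change until the end of the loop.

```python
# Toy illustration of mask-based pruning (hypothetical layer, not the toolbox API).

def layer_output(filters, x, mask=None):
    """Return one activation per filter (dot product with x).

    A masked filter (mask value 0) outputs 0 instead of being removed.
    """
    if mask is None:
        mask = [1] * len(filters)
    return [m * sum(w * xi for w, xi in zip(f, x)) for f, m in zip(filters, mask)]

def next_layer(out_weights, activations):
    """Downstream layer: weighted sum of the activations."""
    return sum(w * a for w, a in zip(out_weights, activations))

def materialize(filters, out_weights, mask):
    """Physically drop masked filters and the matching downstream weights."""
    kept = [i for i, m in enumerate(mask) if m]
    return [filters[i] for i in kept], [out_weights[i] for i in kept]

filters = [[0.5, -1.0], [0.1, 0.2], [2.0, 0.3]]
out_weights = [1.0, -2.0, 0.5]
x = [1.0, 2.0]

# During the pruning loop: only the mask changes, the architecture does not.
mask = [1, 1, 1]
mask[1] = 0

masked_y = next_layer(out_weights, layer_output(filters, x, mask))

# After the loop: rebuild a genuinely smaller network once.
small_filters, small_out = materialize(filters, out_weights, mask)
compact_y = next_layer(small_out, layer_output(small_filters, x))
```

In this sketch, `masked_y` and `compact_y` agree, so the expensive `materialize` step only needs to run once, after the final mask is known.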

For an example of the full pruning workflow, see Prune Image Classification Network Using Taylor Scores.

Creation

Description

Input Arguments

Properties

Object Functions

| Function | Description |
| --- | --- |
| forward | Compute deep learning network output for training |
| predict | Compute deep learning network output for inference |
| updatePrunables | Remove filters from prunable layers based on importance scores |
| updateScore | Compute and accumulate Taylor-based importance scores for pruning |
| dlnetwork | Deep learning neural network |

Examples

Algorithms

Pruning a neural network means removing the least important parameters to reduce the size of the network while preserving the quality of its predictions.

You can measure the importance of a set of parameters by the change in loss after removal of the parameters from the network. If the loss changes significantly, then the parameters are important. If the loss does not change significantly, then the parameters are not important and can be pruned.

When you have a large number of parameters in your network, you cannot calculate the change in loss for all possible combinations of parameters. Instead, follow these steps to apply an iterative workflow.

1. Use an approximation to find and remove the least important parameter, or the n least important parameters.

2. Fine-tune the new, smaller network by retraining it for several iterations.

3. Repeat steps 1 and 2 until you reach your compression goal.
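The three steps above can be sketched on a toy problem. The Python code below is an illustration only, not the toolbox workflow. It assumes a hypothetical linear model with a squared-error loss, uses the first-order score |gradient × parameter| as the approximation in step 1, and treats "removal" as setting a mask entry to zero. The names `prune`, `fine_tune`, and `n_per_round`, and the data values, are invented for this example.

```python
# Iterative prune-and-fine-tune loop on a hypothetical linear model (illustration only).

def predict(theta, mask, x):
    """Linear model output; masked parameters contribute nothing."""
    return sum(w * m * xi for w, m, xi in zip(theta, mask, x))

def loss_and_grad(theta, mask, xs, ts):
    """Mean squared error and its gradient; masked parameters get zero gradient."""
    n = len(xs)
    loss, grad = 0.0, [0.0] * len(theta)
    for x, t in zip(xs, ts):
        r = predict(theta, mask, x) - t
        loss += r * r / n
        for i, xi in enumerate(x):
            grad[i] += 2.0 * r * xi * mask[i] / n
    return loss, grad

def fine_tune(theta, mask, xs, ts, steps=200, lr=0.05):
    """Plain gradient descent on the surviving parameters."""
    for _ in range(steps):
        _, grad = loss_and_grad(theta, mask, xs, ts)
        theta = [w - lr * g for w, g in zip(theta, grad)]
    return theta

def prune(theta, xs, ts, target, n_per_round=1):
    """Repeat score -> remove -> fine-tune until `target` parameters remain."""
    mask = [1] * len(theta)
    while sum(mask) > target:
        _, grad = loss_and_grad(theta, mask, xs, ts)
        # Step 1: rank surviving parameters by the first-order score |grad * theta|.
        alive = [i for i, m in enumerate(mask) if m]
        alive.sort(key=lambda i: abs(grad[i] * theta[i]))
        for i in alive[:min(n_per_round, sum(mask) - target)]:
            mask[i] = 0
        # Step 2: fine-tune the smaller model.
        theta = fine_tune(theta, mask, xs, ts)
    # Step 3 is the while loop itself: stop at the compression goal.
    return theta, mask

xs = [[1.0, 0.0, 0.5, 0.2], [0.0, 1.0, 0.1, 0.3], [1.0, 1.0, 0.2, 0.1],
      [2.0, 1.0, 0.3, 0.5], [1.0, 2.0, 0.4, 0.2]]
ts = [2.0, -1.0, 1.0, 3.0, 0.0]
theta0 = [1.5, -0.5, 0.2, -0.1]

theta_final, mask_final = prune(theta0, xs, ts, target=2)
final_loss, _ = loss_and_grad(theta_final, mask_final, xs, ts)
```

Here the compression goal is a parameter count (`target=2`). A real workflow might instead stop on an accuracy threshold or a fixed number of pruning iterations.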

To perform the approximation in step 1, calculate the Taylor expansion of the loss as a function of the individual network parameters. This method is called Taylor pruning.

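For some types of layers, including convolutional layers, removing a parameter is equivalent to setting it to zero. In this case, the change in loss resulting from pruning a parameter θ can be expressed as

$$|\Delta \mathrm{loss}(X,\theta)| = |\mathrm{loss}(X,\theta=0) - \mathrm{loss}(X,\theta)|.$$

X is the training data of your network.

Calculate the Taylor expansion of the loss as a function of the parameter θ to first order using

$$\mathrm{loss}(X,\theta) = \mathrm{loss}(X,\theta=0) + \frac{\partial\,\mathrm{loss}}{\partial\theta}\,\theta.$$

Then, you can express the change of loss as a function of the gradient of the loss with respect to the parameter θ using

$$|\Delta \mathrm{loss}(X,\theta)| = \left|\frac{\partial\,\mathrm{loss}}{\partial\theta}\,\theta\right|.$$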
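To make the approximation concrete, the following Python sketch compares the exact change in loss from zeroing a parameter with the first-order estimate |gradient × θ|. It is a toy under stated assumptions, not the toolbox computation: the model is a hypothetical linear model with a mean squared error loss, and the function names and data values are invented for illustration.

```python
# Exact loss change versus first-order Taylor estimate on a hypothetical linear model.

def loss(theta, xs, ts):
    """Mean squared error of y = sum(theta[i] * x[i]) over the data set."""
    total = 0.0
    for x, t in zip(xs, ts):
        y = sum(w * xi for w, xi in zip(theta, x))
        total += (y - t) ** 2
    return total / len(xs)

def gradient(theta, xs, ts):
    """Analytic gradient of the mean squared error with respect to theta."""
    grad = [0.0] * len(theta)
    for x, t in zip(xs, ts):
        y = sum(w * xi for w, xi in zip(theta, x))
        for i, xi in enumerate(x):
            grad[i] += 2.0 * (y - t) * xi / len(xs)
    return grad

def exact_change(theta, xs, ts, i):
    """|loss with theta[i] set to zero - loss with theta unchanged|."""
    pruned = list(theta)
    pruned[i] = 0.0
    return abs(loss(pruned, xs, ts) - loss(theta, xs, ts))

def taylor_scores(theta, xs, ts):
    """First-order estimate |d loss / d theta[i] * theta[i]| for every parameter."""
    g = gradient(theta, xs, ts)
    return [abs(gi * wi) for gi, wi in zip(g, theta)]

xs = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0], [1.0, 1.0, 0.0], [2.0, 1.0, 1.0]]
ts = [1.0, 0.5, 1.2, 2.3]
theta = [1.0, 0.3, 0.01]

scores = taylor_scores(theta, xs, ts)
exact = [exact_change(theta, xs, ts, i) for i in range(len(theta))]

least_by_score = min(range(len(theta)), key=lambda i: scores[i])
least_by_exact = min(range(len(theta)), key=lambda i: exact[i])
```

In this toy, both rankings select the third parameter as the least important. The estimate needs only one gradient evaluation for all parameters, whereas the exact change needs one extra loss evaluation per parameter. The first-order estimate is closest for parameters with small magnitude and can differ noticeably from the exact change for large ones, because higher-order terms are ignored.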

References

[1] Molchanov, Pavlo, Stephen Tyree, Tero Karras, Timo Aila, and Jan Kautz. "Pruning Convolutional Neural Networks for Resource Efficient Inference." arXiv, June 8, 2017. https://arxiv.org/abs/1611.06440.

Version History

Introduced in R2022a

See Also

predict | forward | updatePrunables | updateScore | dlnetwork