read

Description

[pointCloud,range,reflectivity,semantic] = read(lidar) returns a point cloud, distances from the sensor to object points, reflectivity of surface materials, and the semantic identifiers of the objects in a scene. You can set the field of view and angular resolution in the sim3d.sensors.Lidar object specified by lidar.

Examples

Since R2025a

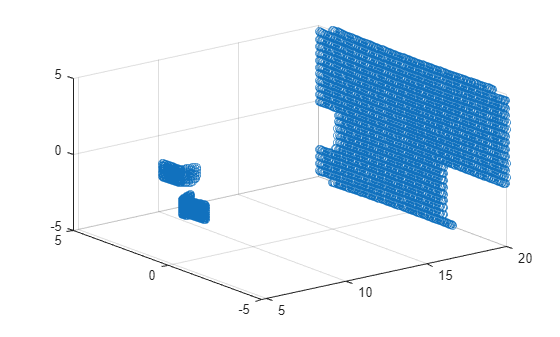

Create a lidar sensor in the 3D environment using the sim3d.sensors.Lidar object. You can extract the point cloud with the specified field of view and angular resolution and display it in MATLAB®. Use the read function to extract the point cloud data from the 3D environment. The point cloud is a collection of data points that represents objects in the 3D environment. You can use point cloud data to map a 3D environment.

Create a 3D environment and set up communication with the Unreal Engine simulation environment using the output function OutputImpl and the update function UpdateImpl. The sim3d.World object can send and receive data about the 3D environment to and from the Unreal Engine at each simulation step using output and update functions, respectively. Before the Unreal Engine simulates, MATLAB calls the output function and sends data to the Unreal Engine. Then, the Unreal Engine executes at each time step and sends data to MATLAB in the update function. You can use the update function to read this data or change values after each simulation step.

world = sim3d.World(Output=@OutputImpl,Update=@UpdateImpl);

Create a box actor Cube1 in the 3D environment using the sim3d.Actor object and add the box to the world.

cube1 = sim3d.Actor( ...
    ActorName="Cube1", ...
    Mobility=sim3d.utils.MobilityTypes.Movable);
createShape(cube1,"box");
cube1.Color = [1 0 0];
cube1.Translation = [5.30 1.10 -0.80];
add(world,cube1);

Create a box actor Cube2 in the 3D environment using the sim3d.Actor object and add the box to the world.

cube2 = sim3d.Actor( ...
    ActorName="Cube2", ...
    Mobility=sim3d.utils.MobilityTypes.Movable);
cube2.Translation = [8.00 -2.20 -0.60];
createShape(cube2,"box");
cube2.Color = [0 1 0];
add(world,cube2);

Create a plane actor Plane1 in the 3D environment using the sim3d.Actor object and add the plane to the world.

plane1 = sim3d.Actor( ...
    ActorName="Plane1", ...
    Mobility=sim3d.utils.MobilityTypes.Movable);
plane1.Translation = [20 0 0];
plane1.Rotation = [0 -pi/2 0];
plane1.Scale = [10 10 1];
createShape(plane1,"plane");
add(world,plane1);

Create a lidar object using the sim3d.sensors.Lidar object. Add the lidar to the world.

lidar = sim3d.sensors.Lidar(ActorName="Lidar");
add(world,lidar);

Run the co-simulation.

sampletime = 1/60;
stoptime = 10;
run(world,sampletime,stoptime);

Output Function

The output function sends data about the actor to the Unreal Engine environment at each simulation step. For this example, the function rotates Cube1 about its Z-axis by incrementing the third element of the Rotation property of Cube1 at each simulation step.

function OutputImpl(world)
    world.Actors.Cube1.Rotation(3) = world.Actors.Cube1.Rotation(3) ...
        + 0.002;
end

Update Function

The update function reads data from the Unreal Engine environment at each simulation step. For this example, the update function uses the read function of the sim3d.sensors.Lidar object to get the point cloud data from the Lidar in the Unreal Engine environment. Use the scatter3 function to visualize the point cloud data in MATLAB.

function UpdateImpl(world)
    [pc,~] = read(world.Actors.Lidar);
    [m,n,~] = size(pc);
    points = reshape(pc,m*n,3);
    scatter3(points(:,1), points(:,2), points(:,3));
end

Input Arguments

lidar

Virtual lidar sensor that detects targets in the 3D environment, specified as a sim3d.sensors.Lidar object.

Example: lidar = sim3d.sensors.Lidar

Output Arguments

pointCloud

Point cloud data, returned as an m-by-n-by-3 array of positive, real-valued [x, y, z] points. m and n define the number of points in the point cloud, as shown in this equation:

$$m = \frac{VFOV}{VRES}, \qquad n = \frac{HFOV}{HRES}$$

where:

- VFOV is the vertical field of view of the lidar, in degrees, as specified by the VerticalFieldOfView argument.

- VRES is the vertical angular resolution of the lidar, in degrees, as specified by the VerticalAngularResolution argument.

- HFOV is the horizontal field of view of the lidar, in degrees, as specified by the HorizontalFieldOfView argument.

- HRES is the horizontal angular resolution of the lidar, in degrees, as specified by the HorizontalAngularResolution argument.
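As a quick worked example of how the field of view and angular resolution determine the array size, the sketch below assumes that m is VFOV divided by VRES and n is HFOV divided by HRES. The numeric values are illustrative only and are not documented defaults of the sensor.

```matlab
% Illustrative values only; these are not documented defaults.
vfov = 40;    % vertical field of view, in degrees
vres = 1.25;  % vertical angular resolution, in degrees
hfov = 360;   % horizontal field of view, in degrees
hres = 0.25;  % horizontal angular resolution, in degrees

% Assumes each field of view divides evenly by its resolution.
m = vfov/vres;   % number of rows in the point cloud
n = hfov/hres;   % number of columns in the point cloud

fprintf("Expected point cloud size: %d-by-%d-by-3\n",m,n);
```

With these values, the point cloud would have 32 rows and 1440 columns.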

Each m-by-n entry in the array specifies the x, y, and z coordinates of a detected point in the sensor coordinate system. If the lidar does not detect a point at a given coordinate, then x, y, and z are returned as NaN.

You can create a point cloud from these returned points by using point cloud functions in the pointCloud (Computer Vision Toolbox) object.
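Because undetected points come back as NaN, it is often convenient to drop them before further processing. The following is a minimal sketch that uses a small synthetic array in place of real read output; the variable names are hypothetical.

```matlab
% Synthetic stand-in for the pointCloud output of read (2-by-3-by-3, single).
% The entry at row 1, column 3 represents a beam that detected nothing.
pc = single(cat(3, ...
    [1 2 NaN; 4 5 6], ...
    [0 1 NaN; 1 0 2], ...
    [0.5 0.5 NaN; 1 1 1]));

[m,n,~] = size(pc);
points = reshape(pc,m*n,3);        % one [x y z] row per beam

valid = ~any(isnan(points),2);     % false where any coordinate is NaN
validPoints = points(valid,:);     % keep only detected points

fprintf("%d of %d beams returned a point\n",size(validPoints,1),m*n);
```

The filtered list loses the m-by-n grid layout, so keep the original array if later steps need to index the range or reflectivity outputs by row and column.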

Data Types: single

range

Distance to object points measured by the lidar sensor, returned as an m-by-n positive real-valued matrix. Each m-by-n value in the matrix corresponds to an [x, y, z] coordinate point returned by the pointCloud output argument.
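Since the range matrix and the point cloud share the same m-by-n layout, an index found in one can be used to look up the other. The sketch below finds the nearest detection and retrieves its coordinates. It uses synthetic data with hypothetical variable names, and it assumes that beams with no detection are NaN in the range output as well, which this page states only for the point coordinates.

```matlab
% Synthetic stand-ins for the range and pointCloud outputs (2-by-3 grid).
% Assumption: beams with no detection are NaN in both arrays.
rangeData = single([5.2 NaN 7.1; 3.4 9.0 NaN]);
pc = single(cat(3, ...
    [5.0 NaN 7.0; 3.0 8.5 NaN], ...
    [1.0 NaN 1.0; 1.5 3.0 NaN], ...
    [0.5 NaN 0.5; 0.2 0.1 NaN]));

% min ignores NaN values by default and returns a linear index.
[minRange,idx] = min(rangeData(:));
[row,col] = ind2sub(size(rangeData),idx);

% Look up the [x y z] coordinates at the same grid position.
nearestPoint = squeeze(pc(row,col,:)).';
```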

Data Types: single

reflectivity

Reflectivity of surface materials, returned as an m-by-n matrix of intensity values in the range [0, 1], where m is the number of rows in the point cloud and n is the number of columns. Each point in the reflectivity output corresponds to a point in the pointCloud output. The function returns points that are not part of a surface material as NaN.

To calculate reflectivity, the lidar sensor uses the Phong reflection model [1]. The model describes surface reflectivity as a combination of diffuse reflections (scattered reflections, such as from rough surfaces) and specular reflections (mirror-like reflections, such as from smooth surfaces).

Data Types: single

semantic

Semantic label identifier for each pixel in the image, returned as an m-by-n array of RGB triplet values. m is the vertical resolution of the image. n is the horizontal resolution of the image.

The table shows the object IDs used in the default scenes. If a scene contains an object that does not have an assigned ID, that object is assigned an ID of 0. The detection of lane markings is not supported.

| ID | Type |
|---|---|
| 0 | None/default |
| 1 | Building |
| 2 | Not used |
| 3 | Other |
| 4 | Pedestrians |
| 5 | Pole |
| 6 | Lane Markings |
| 7 | Road |
| 8 | Sidewalk |
| 9 | Vegetation |
| 10 | Vehicle |
| 11 | Not used |
| 12 | Generic traffic sign |
| 13 | Stop sign |
| 14 | Yield sign |
| 15 | Speed limit sign |
| 16 | Weight limit sign |
| 17-18 | Not used |
| 19 | Left and right arrow warning sign |
| 20 | Left chevron warning sign |
| 21 | Right chevron warning sign |
| 22 | Not used |
| 23 | Right one-way sign |
| 24 | Not used |
| 25 | School bus only sign |
| 26-38 | Not used |
| 39 | Crosswalk sign |
| 40 | Not used |
| 41 | Traffic signal |
| 42 | Curve right warning sign |
| 43 | Curve left warning sign |
| 44 | Up right arrow warning sign |
| 45-47 | Not used |
| 48 | Railroad crossing sign |
| 49 | Street sign |
| 50 | Roundabout warning sign |
| 51 | Fire hydrant |
| 52 | Exit sign |
| 53 | Bike lane sign |
| 54-56 | Not used |
| 57 | Sky |
| 58 | Curb |
| 59 | Flyover ramp |
| 60 | Road guard rail |
| 61 | Bicyclist |
| 62-66 | Not used |
| 67 | Deer |
| 68-70 | Not used |
| 71 | Barricade |
| 72 | Motorcycle |
| 73-255 | Not used |
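One way to turn IDs from this table into readable labels is a lookup map. The sketch below is illustrative only: it includes just a few rows of the table, and it operates on a hypothetical matrix of integer IDs rather than on actual read output, whose exact encoding is not shown here. The variable names are also hypothetical.

```matlab
% Subset of the ID table; extend it with the remaining rows as needed.
ids   = [0 1 4 7 9 10];
names = {'None/default','Building','Pedestrians','Road','Vegetation','Vehicle'};
idToName = containers.Map(ids,names);

% Hypothetical 2-by-3 matrix of semantic IDs (not actual read output).
semanticIds = uint8([7 7 10; 9 1 99]);

labels = cell(size(semanticIds));
for k = 1:numel(semanticIds)
    id = double(semanticIds(k));   % map keys are stored as double
    if isKey(idToName,id)
        labels{k} = idToName(id);
    else
        labels{k} = 'Unknown (not in this subset)';
    end
end

disp(labels);
```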

Dependencies

To enable semantic output, set the EnableSemanticOutput argument of the sim3d.sensors.Camera object to 1.

References

[1] Phong, Bui Tuong. “Illumination for Computer Generated Pictures.” Communications of the ACM 18, no. 6 (June 1975): 311–17. https://doi.org/10.1145/360825.360839.

Version History

Introduced in R2024b

See Also

sim3d.sensors.Lidar | sim3d.World | sim3d.Actor | pointCloud (Computer Vision Toolbox) | pcplayer (Computer Vision Toolbox)
