metric.Result

Description

A metric.Result object contains the metric data for a specified metric algorithm that traces to the specified unit.

Creation

Description

metric_result = metric.Result

Alternatively, if you collect results by executing a metric.Engine object, using the getMetrics function on the engine object returns the collected metric.Result objects in an array.

Properties

UserData: User data provided by the metric algorithm, returned as a string.

Value: Result value of the metric for the specified algorithm and artifacts, returned as an integer, string, double vector, or structure. For a list of metrics and their result values, see Design Cost Model Metrics and Model Testing Metrics (Simulink Check).
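Because the result value can have several types, code that displays it usually needs to branch on the type. The following is a minimal sketch of one way to do that. The values are hypothetical stand-ins rather than output from a real metric, and the structure field names FieldA and FieldB are invented for illustration.

```matlab
% Hypothetical stand-ins for the result value of four different metrics.
% FieldA and FieldB are invented field names, not documented ones.
values = {57, "passed", [3 1 4], struct('FieldA', 2, 'FieldB', 5)};

summaries = strings(1, numel(values));
for k = 1:numel(values)
    v = values{k};
    if isstruct(v)
        % Structure: list the field names it carries.
        summary = "struct with fields: " + strjoin(string(fieldnames(v))', ", ");
    elseif isstring(v) || ischar(v)
        % Text: use it as is.
        summary = string(v);
    elseif isnumeric(v) && isscalar(v)
        % Scalar number: convert directly.
        summary = string(v);
    else
        % Numeric vector: mat2str keeps the value on one line with brackets.
        summary = string(mat2str(v));
    end
    summaries(k) = summary;
    disp(summary)
end
```

With a real result object, the loop body would receive the result value of that object instead of an element of the stand-in cell array.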

MetricID: Metric identifier for the metric algorithm that calculates the results, returned as a string.

Example: 'DataSegmentEstimate'

Artifacts: Testing artifacts for which the metric is calculated, returned as a structure or an array of structures. For each artifact that the metric analyzes, the returned structure contains these fields:

- UUID: Unique identifier of the artifact
- Name: Name of the artifact
- ParentUUID: Unique identifier of the file that contains the artifact
- ParentName: Name of the file that contains the artifact
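Since the artifact information can be an array of structures, it is often convenient to pull one field out of every element at once. This is a sketch under stated assumptions: the structure array below is a hypothetical stand-in with invented values that only mirrors the four documented fields, and it assumes the text fields hold character vectors or strings.

```matlab
% Hypothetical stand-in for the artifact structure array of one result.
% All values are invented for illustration.
artifacts = struct( ...
    'UUID',       {'uuid-1', 'uuid-2'}, ...
    'Name',       {'ModelA', 'ModelB'}, ...
    'ParentUUID', {'file-uuid-1', 'file-uuid-2'}, ...
    'ParentName', {'ModelA.slx', 'ModelB.slx'});

% Collect one field from every element. Wrapping the comma-separated list
% in a cell array first works for both character vectors and strings.
names = string({artifacts.Name});
parents = string({artifacts.ParentName});

for k = 1:numel(artifacts)
    fprintf("%s (in %s)\n", names(k), parents(k));
end
```

Converting through a cell array avoids the pitfall of writing [artifacts.Name], which would concatenate character vectors into a single run of text.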

Scope: Scope of metric results, returned as a structure. The scope is the unit for which the metric collected results. The structure contains these fields:

- UUID: Unique identifier of the unit
- Name: Name of the unit
- ParentUUID: Unique identifier of the file that contains the unit
- ParentName: Name of the file that contains the unit
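When results from several units are collected together, the scope can be used to group them. The sketch below counts results per unit. The structure array is a hypothetical stand-in for the scope structures of three results, with invented values, and is not output from a real engine.

```matlab
% Hypothetical stand-ins for the scope structures of three results.
% All values are invented for illustration.
scopes = struct( ...
    'UUID',       {'unit-1', 'unit-2', 'unit-1'}, ...
    'Name',       {'UnitA', 'UnitB', 'UnitA'}, ...
    'ParentUUID', {'file-1', 'file-2', 'file-1'}, ...
    'ParentName', {'UnitA.slx', 'UnitB.slx', 'UnitA.slx'});

% Group by UUID, keeping the order of first appearance.
[unitIds, ~, groupIdx] = unique(string({scopes.UUID}), 'stable');

% Count how many results belong to each unit.
countsPerUnit = accumarray(groupIdx(:), 1)';

for k = 1:numel(unitIds)
    fprintf("%s: %d result(s)\n", unitIds(k), countsPerUnit(k));
end
```

Grouping on UUID rather than Name is a deliberate choice here, because the documented purpose of UUID is to identify the unit uniquely, while two units in different files could share a name.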

Diagnostics (since R2025a): Errors and warnings from metric collection, returned as a structure or an array of structures. Each structure contains these fields:

- Severity: Severity of the diagnostic message, returned as either "ERROR" or "WARNING".
- Identifier: Identifier for the diagnostic message.
- Message: Diagnostic message.

Data Types: struct
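A common use of the diagnostic information is to separate errors from warnings before reporting them. The following is a minimal sketch. The structure array is a hypothetical stand-in that mirrors the three documented fields, and the identifiers and messages are invented.

```matlab
% Hypothetical stand-in for the diagnostics of one result.
% Identifiers and messages are invented for illustration.
diagnostics = struct( ...
    'Severity',   {"ERROR", "WARNING", "WARNING"}, ...
    'Identifier', {'demo:collection:failed', 'demo:artifact:stale', 'demo:artifact:skipped'}, ...
    'Message',    {'Invented error text.', 'Invented warning text.', 'Another invented warning.'});

% Build a logical mask from the Severity field of every element.
severities = string({diagnostics.Severity});
isError = severities == "ERROR";

errorDiags = diagnostics(isError);
warningDiags = diagnostics(~isError);

fprintf("%d error(s), %d warning(s)\n", numel(errorDiags), numel(warningDiags));
for k = 1:numel(errorDiags)
    fprintf("[%s] %s\n", errorDiags(k).Identifier, errorDiags(k).Message);
end
```

The same mask approach extends to an array of results by concatenating the diagnostics of each result before filtering.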

Examples

Use a metric.Engine object to collect design cost metric data on a model reference hierarchy in a project.

To open the project, enter this command.

openExample('simulink/VisualizeModelReferenceHierarchiesExample')

The project contains sldemo_mdlref_depgraph, which is the top-level model in a model reference hierarchy. This model reference hierarchy represents one design unit.

Create a metric.Engine object.

metric_engine = metric.Engine();

Update the trace information for metric_engine to reflect any pending artifact changes.

updateArtifacts(metric_engine)

Create an array of metric identifiers for the metrics you want to collect. For this example, create a list of all available design cost estimation metrics.

metric_Ids = getAvailableMetricIds(metric_engine,...
    'App','DesignCostEstimation')

metric_Ids =

  1×2 string array

    "DataSegmentEstimate"    "OperatorCount"

To collect results, execute the metric engine.

execute(metric_engine,metric_Ids);

Because the engine was executed without the argument for ArtifactScope, the engine collects metrics for the sldemo_mdlref_depgraph model reference hierarchy.

Use the getMetrics function to access the high-level design cost metric results.

results_OperatorCount = getMetrics(metric_engine,'OperatorCount');
results_DataSegmentEstimate = getMetrics(metric_engine,'DataSegmentEstimate');

disp(['Unit: ', results_OperatorCount.Artifacts.Name])
disp(['Total Cost: ', num2str(results_OperatorCount.Value)])

disp(['Unit: ', results_DataSegmentEstimate.Artifacts.Name])
disp(['Data Segment Size (bytes): ', num2str(results_DataSegmentEstimate.Value)])

Unit: sldemo_mdlref_depgraph
Total Cost: 57
Unit: sldemo_mdlref_depgraph
Data Segment Size (bytes): 228

The results show that for the sldemo_mdlref_depgraph model, the estimated total cost of the design is 57 and the estimated data segment size is 228 bytes.
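When more than a couple of metrics are collected, a table can be easier to scan than individual disp calls. The sketch below builds one from a stand-in structure array that copies the two values reported in this example. It assumes that every result value is a numeric scalar and that each result exposes its metric identifier, value, and scope under the names MetricID, Value, and Scope. The stand-in is not a real metric.Result array.

```matlab
% Stand-in for two collected results, using the values reported above.
% Only the fields needed for the table are mimicked.
results = struct( ...
    'MetricID', {'OperatorCount', 'DataSegmentEstimate'}, ...
    'Value',    {57, 228}, ...
    'Scope',    struct('Name', 'sldemo_mdlref_depgraph'));

% Gather one column per field. Concatenating Value with brackets only
% works because every value in this stand-in is a numeric scalar.
metricIds = string({results.MetricID})';
values = [results.Value]';
scopes = [results.Scope];
units = string({scopes.Name})';

summaryTable = table(units, metricIds, values, ...
    'VariableNames', {'Unit', 'MetricID', 'Value'});
disp(summaryTable)
```

For metrics that return vectors or structures, the Value column would need to be a cell array instead of a numeric column.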

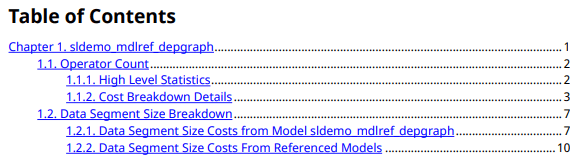

Use the generateReport function to access detailed metric results in a PDF report. Name the report 'MetricResultsReport.pdf'.

reportLocation = fullfile(pwd,'MetricResultsReport.pdf');
generateReport(metric_engine,...
    'App','DesignCostEstimation',...
    'Type','pdf',...
    'Location',reportLocation);

The report contains a detailed breakdown of the operator count and data segment estimate metric results.

Version History

Introduced in R2022a

metric.Result objects return diagnostic errors and warnings that occurred during metric collection by using the Diagnostics property.

metric.Result objects do not return the fields Type and ParentType for the properties Artifacts and Scope.

See Also

metric.Engine | execute | getMetrics | Design Cost Model Metrics
