Fuse Prerecorded Lidar and Camera Data to Generate Vehicle Track List for Scenario Generation

This example shows how to fuse prerecorded lidar and camera object detections to create a smoothed vehicle track list that you can use to generate scenarios.

Track lists are crucial for interpreting the positions and trajectories of the vehicles in a scenario. When you generate a track list, you can achieve better vehicle localization by fusing data from multiple sensors instead of relying on data from a single sensor. This example uses a joint integrated probabilistic data association (JIPDA) smoother to generate a smoothed track list from prerecorded lidar and camera object detections.

In this example, you:

- Load and visualize lidar and camera data and their prerecorded object detections.

- Initialize a JIPDA smoother by using properties of a trackerJPDA (Sensor Fusion and Tracking Toolbox) System object™.

- Fuse the camera and lidar detections and generate smoothed tracks by using the smooth (Sensor Fusion and Tracking Toolbox) function.

- Visualize the detections and smoothed tracks.

- Convert the smoothed tracks from the objectTrack (Sensor Fusion and Tracking Toolbox) format to the actorTracklist format, which you can use to generate a scenario. To create a RoadRunner scenario from an actor track list, see the Generate RoadRunner Scenario from Recorded Sensor Data example.

Load and Visualize Sensor Data and Prerecorded Object Detections

This example requires the Scenario Builder for Automated Driving Toolbox™ support package. Check whether the support package is installed. If it is not installed, install it by using Get and Manage Add-Ons.

checkIfScenarioBuilderIsInstalled

Download a ZIP file containing a subset of sensor data and prerecorded object detections from the PandaSet data set. The ZIP file contains several MAT files. Each MAT file contains the following timestamped sensor data:

- Lidar data: Contains lidar point clouds and prerecorded object detections as 3D bounding boxes. A pretrained pointPillarsObjectDetector (Lidar Toolbox) object was used to detect these bounding boxes. For more information, see Extract Vehicle Track List from Recorded Lidar Data for Scenario Generation.

- Camera data: Contains forward-facing camera images and prerecorded object detections as 2D bounding boxes. A pretrained yolov4ObjectDetector object was used to detect these bounding boxes. For more information, see Extract Vehicle Track List from Recorded Camera Data for Scenario Generation.

Load the first MAT file, which contains the first frame of the data, into the workspace as the variable dataLog.

dataFolder = tempdir;
dataFileName = "PandasetLidarCameraData.zip";
url = "https://ssd.mathworks.com/supportfiles/driving/data/" + dataFileName;
filePath = fullfile(dataFolder,dataFileName);
if ~isfile(filePath)
    websave(filePath,url);
end
unzip(filePath,dataFolder);
dataPath = fullfile(dataFolder,"PandasetLidarCameraData");
fileName = fullfile(dataPath,strcat(num2str(1,"%03d"),".mat"));
load(fileName,"dataLog");

Read the point cloud data and object detections from dataLog by using the helperGetLidarData function.

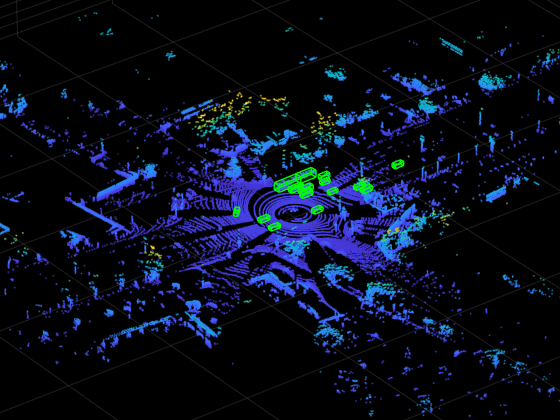

[ptCld,lidarBboxes] = helperGetLidarData(dataLog); ax = pcshow(ptCld);

Display the detected 3D bounding boxes on point cloud data.

showShape("Cuboid",lidarBboxes,Color="green",Parent=ax,Opacity=0.15,LineWidth=1);
zoom(ax,4)

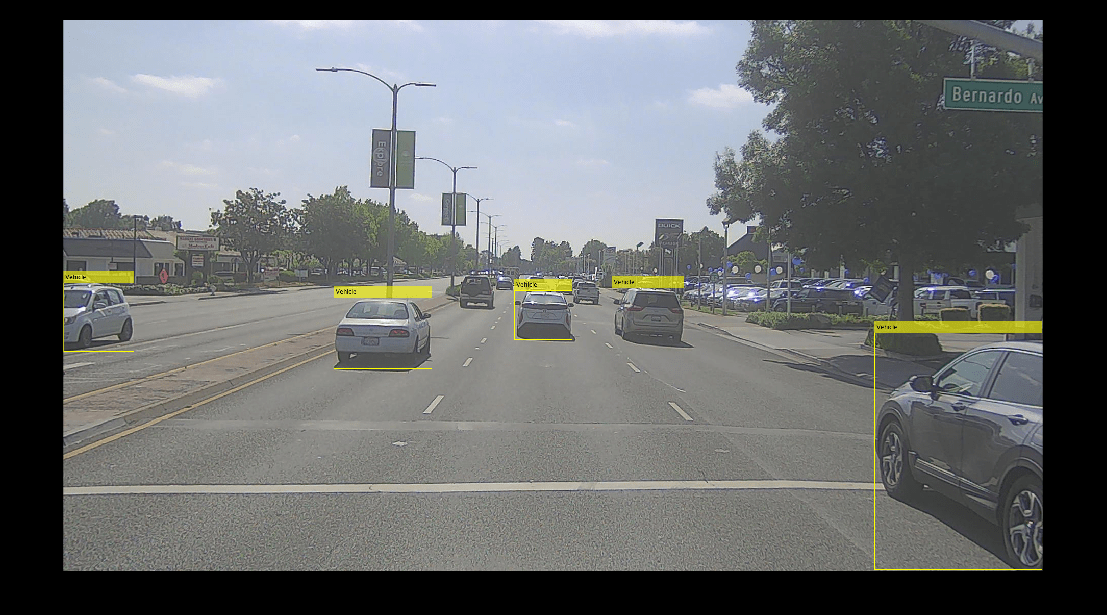

Read the image and object detections from dataLog by using the helperGetCameraData function.

[img,cameraBBoxes] = helperGetCameraData(dataLog,dataFolder);

Annotate the images with the detected 2D bounding boxes.

img = insertObjectAnnotation(img,"Rectangle",cameraBBoxes,"Vehicle");
imshow(img)

Initialize Tracker

Construct a trackerJPDA (Sensor Fusion and Tracking Toolbox) object, setting its FilterInitializationFcn property to the helperInitLidarCameraFusionFilter function. This function returns an extended Kalman filter that tracks 3D bounding box detections from lidar data and 2D bounding box detections from camera data. For more information on trackerJPDA properties, see the trackerJPDA (Sensor Fusion and Tracking Toolbox) System object™.

For more information on the tracking algorithm, see Object-Level Fusion of Lidar and Camera Data for Vehicle Tracking (Sensor Fusion and Tracking Toolbox).

Construct a trackerJPDA object.

tracker = trackerJPDA( ...
    TrackLogic="Integrated", ...
    FilterInitializationFcn=@helperInitLidarCameraFusionFilter, ...
    AssignmentThreshold=[20 200], ...
    MaxNumTracks=500, ...
    DetectionProbability=0.95, ...
    MaxNumEvents=50, ...
    ClutterDensity=1e-7, ...
    NewTargetDensity=1e-7, ...
    ConfirmationThreshold=0.99, ...
    DeletionThreshold=0.2, ...
    DeathRate=0.5);
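With TrackLogic set to "Integrated", the tracker confirms and deletes tracks based on a probability of track existence, which it compares against ConfirmationThreshold and DeletionThreshold. As a rough illustration of how these thresholds interact with DetectionProbability, the following sketch applies the textbook integrated PDA missed-detection update to a track that has just been confirmed. It is a simplified model under stated assumptions: it ignores DeathRate, clutter, and gating, and it is not the toolbox's internal computation.

```matlab
% Simplified existence-probability sketch (assumption: textbook IPDA
% missed-detection update; ignores DeathRate, clutter, and gating).
Pd = 0.95;                 % DetectionProbability used in this example
confirmThreshold = 0.99;   % ConfirmationThreshold used in this example
deleteThreshold = 0.2;     % DeletionThreshold used in this example

r = confirmThreshold;      % Start from a track that was just confirmed
numMisses = 0;
while r >= deleteThreshold
    % Bayes update of the existence probability after a missed detection
    r = (1 - Pd)*r/(1 - Pd*r);
    numMisses = numMisses + 1;
end
fprintf("Existence probability %.3f after %d consecutive misses\n",r,numMisses);
```

Under these assumptions, two consecutive missed updates are enough to take a just-confirmed track below the deletion threshold, which suggests why a high detection probability leads to fast track deletion.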

Determine the start time using the first frame of dataLog.

startTime = dataLog.LidarData.Timestamp;

Specify the total number of frames to track from the data set.

numFrames = 80;

Compute the simulation time of the tracker as the difference between the timestamp of the last frame to track and the start time.

fileName = fullfile(dataPath,strcat(num2str(numFrames,"%03d"),".mat"));
load(fileName,"dataLog");
stopTime = dataLog.LidarData.Timestamp;
simTime = stopTime - startTime;

Create a smootherJIPDA (Sensor Fusion and Tracking Toolbox) object by reusing the detection-to-track assignment properties of the trackerJPDA (Sensor Fusion and Tracking Toolbox) object. The smoother fuses the forward-predicted tracks with the backward-predicted tracks to produce smoothed track estimates.

smoother = smootherJIPDA(tracker);

Specify the parameters of the JIPDA smoother.

smoother.MaxNumDetectionAssignmentEvents = 100; % Maximum number of detection assignment events
smoother.MaxNumTrackAssignmentEvents = 100; % Maximum number of backward track assignment events
smoother.AnalysisFcn = @(x)helperShowProgress(x,simTime); % Specify a helper function to display progress bar
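The idea of fusing a forward pass with a backward pass can be illustrated on a much simpler problem. The following sketch runs a forward Kalman filter and then a backward Rauch-Tung-Striebel (RTS) pass on a single simulated 1-D target. This is a stand-in for intuition only, not the JIPDA smoother: it has no data association or track existence logic, and the motion model, noise values, and variable names are all illustrative assumptions.

```matlab
% Forward Kalman filter + backward RTS smoother on one simulated target.
% All model and noise values below are assumptions for illustration.
rng(0);                                % Reproducible noise
dt = 0.1; n = 80;                      % Assumed frame period and frame count
F = [1 dt; 0 1]; H = [1 0];            % Constant velocity, position measured
Q = 0.01*[dt^3/3 dt^2/2; dt^2/2 dt];   % Process noise covariance
R = 0.25;                              % Measurement noise variance

% Simulate the true trajectory and noisy position measurements
xTrue = zeros(2,n); xTrue(:,1) = [0; 5];
for k = 2:n
    xTrue(:,k) = F*xTrue(:,k-1);
end
z = H*xTrue + sqrt(R)*randn(1,n);

% Forward pass: store predicted (xp,Pp) and filtered (xf,Pf) estimates
xp = zeros(2,n); Pp = zeros(2,2,n); xf = zeros(2,n); Pf = zeros(2,2,n);
x = [0; 0]; P = diag([100 25]);        % Vague prior
for k = 1:n
    if k > 1
        x = F*x; P = F*P*F' + Q;       % Predict
    end
    xp(:,k) = x; Pp(:,:,k) = P;
    K = P*H'/(H*P*H' + R);             % Kalman gain
    x = x + K*(z(k) - H*x);            % Correct
    P = (eye(2) - K*H)*P;
    xf(:,k) = x; Pf(:,:,k) = P;
end

% Backward pass: RTS recursion refines each estimate using later frames
xs = xf; Ps = Pf;
for k = n-1:-1:1
    G = Pf(:,:,k)*F'/Pp(:,:,k+1);      % Smoother gain
    xs(:,k) = xf(:,k) + G*(xs(:,k+1) - xp(:,k+1));
    Ps(:,:,k) = Pf(:,:,k) + G*(Ps(:,:,k+1) - Pp(:,:,k+1))*G';
end

rmseFiltered = sqrt(mean((xf(1,:) - xTrue(1,:)).^2));
rmseSmoothed = sqrt(mean((xs(1,:) - xTrue(1,:)).^2));
fprintf("Position RMSE: filtered %.3f, smoothed %.3f\n",rmseFiltered,rmseSmoothed);
```

In this sketch, the smoothed position variance Ps(1,1,k) is never larger than the filtered variance Pf(1,1,k), because each smoothed estimate also uses measurements from later frames. This is the general benefit that offline smoothing offers when the full recording is available.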

Set Up Detections

To fuse the camera and lidar object detections using the JIPDA smoother, you must first set up the detections.

- Convert 2D bounding box detections of camera data to the objectDetection (Sensor Fusion and Tracking Toolbox) format by using the helperAssembleCameraDetections function.

- Convert 3D bounding box detections of lidar data to the objectDetection (Sensor Fusion and Tracking Toolbox) format by using the helperAssembleLidarDetections function.

% Initialize variables to store lidar and camera detections.
lidarDetections = cell(1,numFrames);
cameraDetections = cell(1,numFrames);
detectionLog = [];
for frame = 1:numFrames
    % Load the frame specific data.
    fileName = fullfile(dataPath,strcat(num2str(frame,"%03d"),".mat"));
    load(fileName,"dataLog");
    % Update the simulation time.
    time = dataLog.LidarData.Timestamp - startTime;
    % Convert 3D bounding box detections of lidar data to the objectDetection format.
    [~,lidarBoxes,lidarPose] = helperGetLidarData(dataLog);
    lidarDetections{frame} = helperAssembleLidarDetections(lidarBoxes,lidarPose,time,1);
    % Convert 2D bounding box detections of camera data to the objectDetection format.
    [~,cameraBoxes,cameraPose] = helperGetCameraData(dataLog,dataFolder);
    cameraDetections{frame} = helperAssembleCameraDetections(cameraBoxes,cameraPose,time,2);
    % Concatenate lidar and camera detections.
    if frame == 1
        detections = lidarDetections{frame};
    else
        detections = [lidarDetections{frame};cameraDetections{frame}];
    end
    % Assemble detections for smoothing.
    detectionLog = [detectionLog(:);detections(:)];
end
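The loop above builds one column cell array in which each frame contributes its lidar detections followed by its camera detections. The following sketch reproduces that concatenation pattern with stand-in data so that you can inspect the resulting layout without the data set. The structs, their Time and SensorIndex fields, the frame period, and the detection counts are hypothetical placeholders for the objectDetection objects that the helper functions return. The sensor indices 1 and 2 mirror the last argument passed to the helper functions, which is presumably the sensor index.

```matlab
% Stand-in detection log (assumption: structs replace objectDetection objects).
numStandInFrames = 3;
standInLog = [];
for frame = 1:numStandInFrames
    time = (frame - 1)*0.1;    % Assumed frame period of 0.1 s
    % Two hypothetical lidar detections and one camera detection per frame
    lidarDets = {struct('Time',time,'SensorIndex',1); ...
                 struct('Time',time,'SensorIndex',1)};
    cameraDets = {struct('Time',time,'SensorIndex',2)};
    % Same concatenation pattern as the example loop
    detections = [lidarDets;cameraDets];
    standInLog = [standInLog(:);detections(:)];
end

% Inspect the layout of the assembled log
times = cellfun(@(d)d.Time,standInLog);
sensors = cellfun(@(d)d.SensorIndex,standInLog);
fprintf("%d detections, %d from sensor 1, %d from sensor 2\n", ...
    numel(standInLog),nnz(sensors == 1),nnz(sensors == 2));
fprintf("Times are in nondecreasing order: %d\n",issorted(times));
```

Because frames are appended in order, the detection times in the log never decrease, and the sensor index is what distinguishes lidar entries from camera entries within a frame.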

Display the first eight entries of the detection log.

head(detectionLog)

    {1×1 objectDetection}
    {1×1 objectDetection}
    {1×1 objectDetection}
    {1×1 objectDetection}
    {1×1 objectDetection}
    {1×1 objectDetection}
    {1×1 objectDetection}
    {1×1 objectDetection}

Fuse Camera and Lidar Detections Using a Smoother

Fuse the camera and lidar detections by using the smooth (Sensor Fusion and Tracking Toolbox) function of the smootherJIPDA (Sensor Fusion and Tracking Toolbox) System object™. This function returns smoothed tracks as a cell array containing objectTrack (Sensor Fusion and Tracking Toolbox) objects.

smoothTracks = smooth(smoother,detectionLog);

Display the first eight entries of the smoothed tracks.

head(smoothTracks)

    {15×1 objectTrack}
    {15×1 objectTrack}
    {16×1 objectTrack}
    {17×1 objectTrack}
    {17×1 objectTrack}
    {17×1 objectTrack}
    {17×1 objectTrack}
    {17×1 objectTrack}

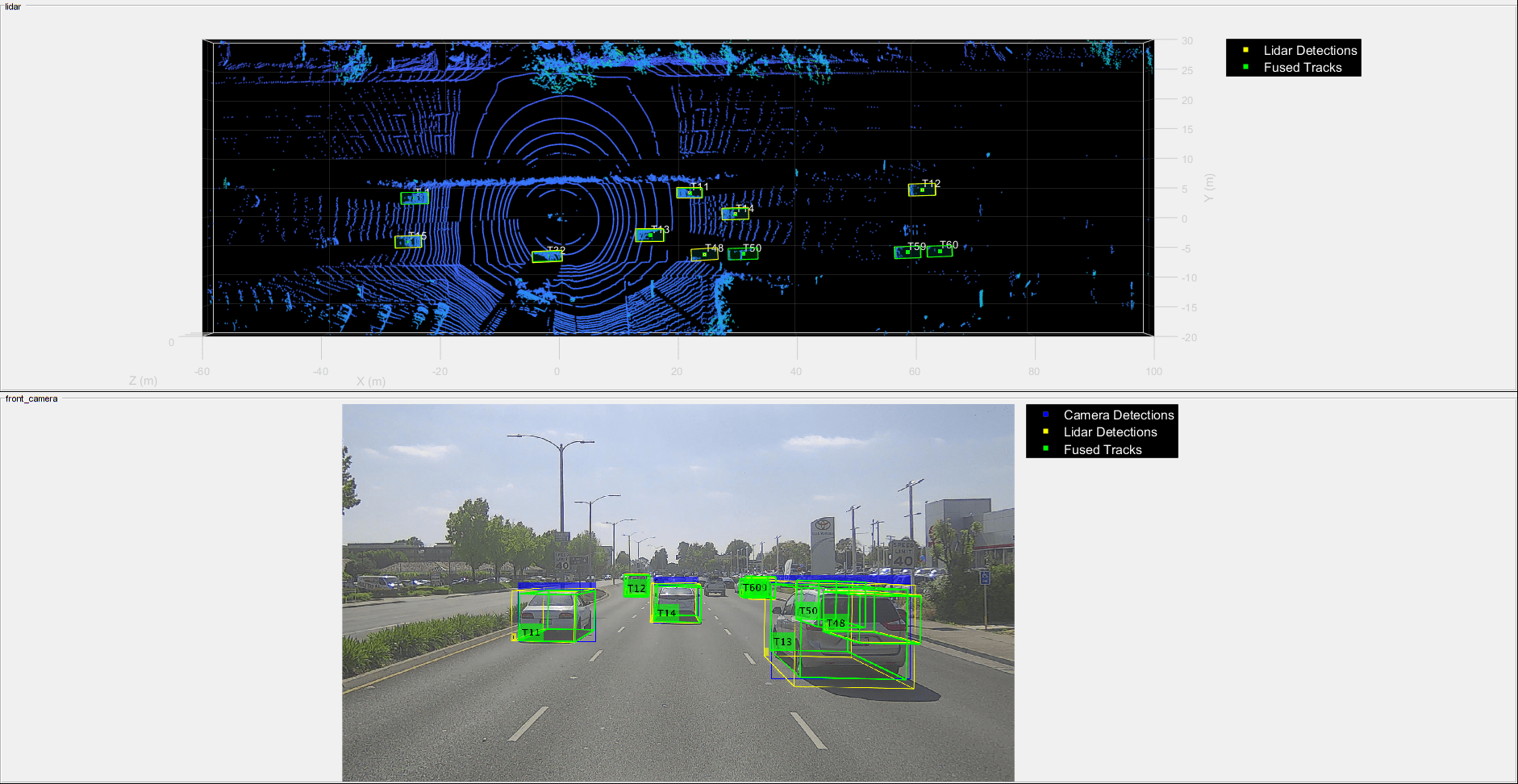

Visualize Detections and Fused Tracks

Visualize the smoothed tracks by using the helperLidarCameraFusionWithSmoothingDisplay helper class. This visualization shows the lidar detections, camera detections, and fused tracks overlaid on the lidar point cloud data and camera images.

display = helperLidarCameraFusionWithSmoothingDisplay;
for frame = 1:numFrames
    % Load the frame specific data.
    fileName = fullfile(dataPath,strcat(num2str(frame,"%03d"),".mat"));
    load(fileName,"dataLog");
    % Extract tracks for the current frame.
    tracks = smoothTracks{frame};
    % Update visualization for the current frame.
    display(dataFolder,dataLog,lidarDetections{frame},cameraDetections{frame},tracks);
    drawnow
end

Format Track List

Convert the smoothed tracks from the objectTrack (Sensor Fusion and Tracking Toolbox) format to the actorTracklist format by using the helperObjectTrack2ActorTrackList function.

You can use the track data in the actorTracklist format to create actor trajectories. For more information on how to create a RoadRunner scenario from an actor track list, see Generate RoadRunner Scenario from Recorded Sensor Data.

tracklist = helperObjectTrack2ActorTrackList(smoothTracks)

tracklist =
  actorTracklist with properties:
         TimeStamp: [80×1 double]
          TrackIDs: {80×1 cell}
          ClassIDs: {80×1 cell}
          Position: {80×1 cell}
         Dimension: {80×1 cell}
       Orientation: {80×1 cell}
          Velocity: {80×1 cell}
             Speed: {80×1 cell}
         StartTime: 0
           EndTime: 7.8995
        NumSamples: 80
    UniqueTrackIDs: [27×1 string]

Helper Function

The helperObjectTrack2ActorTrackList helper function converts tracks from the objectTrack (Sensor Fusion and Tracking Toolbox) format to the actorTracklist format.

function tracklist = helperObjectTrack2ActorTrackList(allTracks)
% Convert tracks from the objectTrack format to the actorTracklist format.
% Initialize an actorTracklist object.
tracklist = actorTracklist;
% Specify indices to extract data from the track state.
PositionIndex = [1 3 6];
VelocityIndex = [2 4 7];
DimensionIndex = [9 10 11];
YawIndex = 8;
% Iterate over all tracks and add to the list.
for i = 1:numel(allTracks)
    % Get the tracks for the current time step.
    tracks = allTracks{i};
    % Extract the track parameters.
    timeStamp = [tracks.UpdateTime]';
    trackIds = string([tracks.TrackID]');
    classIds = [tracks.ObjectClassID]';
    pos = arrayfun(@(x)x.State(PositionIndex)',tracks,UniformOutput=false);
    vel = arrayfun(@(x)x.State(VelocityIndex)',tracks,UniformOutput=false);
    speed = arrayfun(@(x)norm(x.State(VelocityIndex)'),tracks,UniformOutput=false);
    dim = arrayfun(@(x)x.State(DimensionIndex)',tracks,UniformOutput=false);
    orient = arrayfun(@(x)[x.State(YawIndex) 0 0],tracks,UniformOutput=false);
    % Add data to the actorTracklist object.
    tracklist.addData(timeStamp,trackIds,classIds,pos,Velocity=vel,Dimension=dim,Orientation=orient,Speed=speed);
end
end
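To see what the index vectors in the helper function select, the following sketch applies them to a single made-up state vector. The numeric values are hypothetical, and the sketch assumes only what the indices themselves imply: position in elements 1, 3, and 6, velocity in elements 2, 4, and 7, yaw in element 8, and three dimension values in elements 9 through 11. Element 5 is not used by the helper, so the sketch leaves it at zero.

```matlab
% Hypothetical 11-element track state (values chosen for illustration only)
state = [10; 2; -3; 0.5; 0; 0.8; 0; 15; 4.5; 1.8; 1.5];

% Same index definitions as the helper function
PositionIndex = [1 3 6];
VelocityIndex = [2 4 7];
DimensionIndex = [9 10 11];
YawIndex = 8;

pos = state(PositionIndex)';        % Position as a 1-by-3 row vector
vel = state(VelocityIndex)';        % Velocity as a 1-by-3 row vector
dim = state(DimensionIndex)';       % Three dimension values
orient = [state(YawIndex) 0 0];     % Yaw only; the other two angles are set to 0
speed = norm(vel);                  % Scalar speed from the velocity vector

disp(pos)
disp(vel)
disp(orient)
```

The helper applies the same indexing to every track in a time step through arrayfun, which is why each property of the resulting actorTracklist object holds one cell per time step.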

See Also

Functions

getMapROI | roadprops | selectActorRoads | updateLaneSpec | actorprops | pointCloud | monoCamera | smootherJIPDA (Sensor Fusion and Tracking Toolbox) | trackingEKF

Topics

- Overview of Scenario Generation from Recorded Sensor Data

- Extract Vehicle Track List from Recorded Camera Data for Scenario Generation

- Extract Vehicle Track List from Recorded Lidar Data for Scenario Generation

- Generate RoadRunner Scenario from Recorded Sensor Data

- Generate Scenario from Actor Track Data and GPS Data

- Ego Vehicle Localization Using GPS and IMU Fusion for Scenario Generation

- Extract Lane Information from Recorded Camera Data for Scene Generation