detect

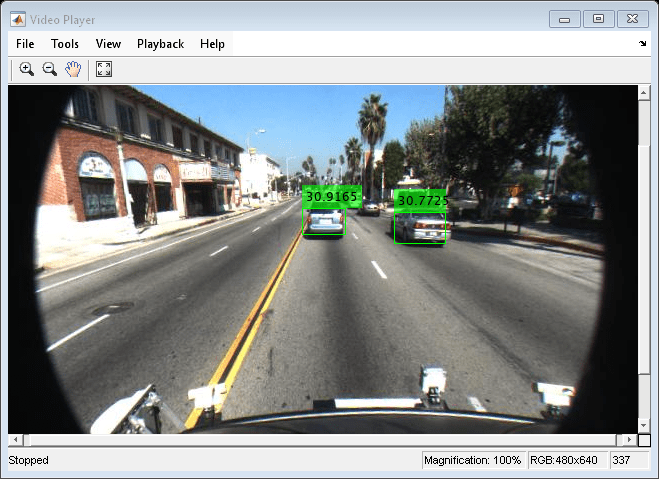

Detect objects using ACF object detector configured for monocular camera

Syntax

Description

[___] = detect(___,Name=Value) specifies options using one or more name-value arguments. For example, detect(detector,I,WindowStride=2) sets the stride of the sliding window used to detect objects to 2.
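The sketch below shows the name-value syntax in context. It assumes the Automated Driving Toolbox is installed. The camera calibration values, the mounting height and pitch, the vehicle width range, and the image file name 'roadScene.png' are hypothetical placeholders, not values from this page. The image is assumed to come from the camera that the monoCamera object describes. The Name=Value form requires R2021a or later. In earlier releases, write detect(detectorMonoCam,I,'WindowStride',2).

```matlab
% Placeholder camera calibration values (assumptions, not from this page).
focalLength    = [800 800];   % [fx fy] in pixels
principalPoint = [320 240];   % [cx cy] in pixels
imageSize      = [480 640];   % [rows cols]
camHeight      = 1.5;         % mounting height above the road, in meters
camPitch       = 2;           % pitch angle, in degrees

% Describe the monocular camera setup.
intrinsics = cameraIntrinsics(focalLength, principalPoint, imageSize);
sensor = monoCamera(intrinsics, camHeight, 'Pitch', camPitch);

% Pretrained ACF vehicle detector, configured for the camera so that it
% only searches for vehicles 1.5 to 2.5 meters wide (assumed range).
detector = vehicleDetectorACF;
detectorMonoCam = configureDetectorMonoCamera(detector, sensor, [1.5 2.5]);

% Hypothetical file name; the image must come from the camera above.
I = imread('roadScene.png');

% Detect with the default sliding-window stride.
[bboxes, scores] = detect(detectorMonoCam, I);

% Detect with a stride of 2. Smaller strides evaluate more window
% positions, trading detection speed for a denser search.
[bboxes2, scores2] = detect(detectorMonoCam, I, WindowStride=2);

% Draw the boxes and scores from the second call.
annotated = insertObjectAnnotation(I, 'rectangle', bboxes2, scores2);
imshow(annotated)
```

Each row of bboxes2 is an [x y width height] box, and scores2 holds one confidence score per box.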

Examples

Input Arguments

Name-Value Arguments

Output Arguments

Version History

Introduced in R2017a