Code Generation for Convolutional LSTM Network

This example shows how to generate a MEX function for a deep learning network that contains convolutional and bidirectional long short-term memory (BiLSTM) layers. The generated function does not use any third-party libraries. The generated MEX function takes a sequence of video frames read from a video file and outputs a label that classifies the activity in the video. For more information on the training of this network, see the example Classify Videos Using Deep Learning (Deep Learning Toolbox). For more information about supported compilers, see Prerequisites for Deep Learning with MATLAB Coder.

This example is supported on Mac®, Linux® and Windows® platforms. It is not supported for MATLAB® Online™.

Prepare Input Video

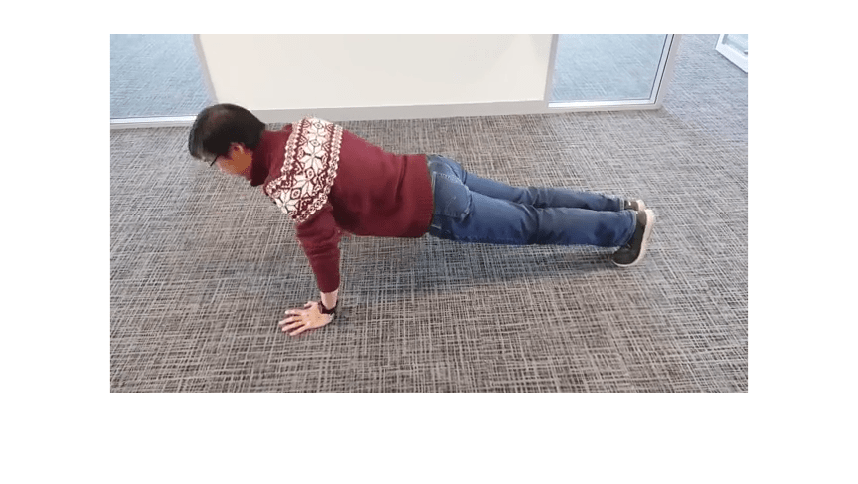

Read the video file pushup.mp4 by using the readVideo helper function. To view the video, loop over the individual frames of the video file and display each one by using the imshow function.

```matlab
filename = "pushup.mp4";
video = readVideo(filename);
numFrames = size(video,4);
figure
for i = 1:numFrames
    frame = video(:,:,:,i);
    imshow(frame/255);
    drawnow
end
```

Center-crop the input video frames to the input size of the trained network by using the centerCrop helper function.

```matlab
inputSize = [224 224 3];
video = centerCrop(video,inputSize);
```
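To see what the cropping step does to the array size, the sketch below applies a simplified, inline version of the crop-and-resize steps to a synthetic landscape clip. The 240-by-320 frame size and the 10-frame length are arbitrary assumptions, only the landscape case is handled, and, like centerCrop, it relies on imresize from Image Processing Toolbox.

```matlab
% Synthetic landscape clip (hypothetical size): 240-by-320 RGB, 10 frames
clip = rand(240,320,3,10,'single');
inputSize = [224 224 3];

% Keep a central square: as many columns as there are rows
[H,W,~,~] = size(clip);
offset = floor((W - H)/2);
square = clip(:, offset + (1:H), :, :);

% imresize resizes only the first two dimensions of an N-D array
croppedClip = imresize(square, inputSize(1:2));

% Each frame now matches the network input size; the frame count is unchanged
disp(size(croppedClip))
```

The number of frames (the fourth dimension) is untouched, which is why the sequence length can stay variable when the input type is declared for code generation.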

The video_classify Entry-Point Function

The video_classify.m entry-point function takes an image sequence and passes it to a trained network for prediction. This function uses the convolutional LSTM network from the example Classify Videos Using Deep Learning (Deep Learning Toolbox). On the first call, the function loads the dlnetwork object from the dlnet.mat file and the class names from the labels.mat file into persistent variables. It then converts the input to a formatted dlarray, uses the predict (Deep Learning Toolbox) function to compute class scores, and converts the scores to a categorical label by using the scores2label function. On subsequent calls, the function reuses the persistent objects.

```matlab
type('video_classify.m')
```

```matlab
function out = video_classify(in) %#codegen

% Copyright 2021-2024 The MathWorks, Inc.

% A persistent object dlnet is used to load the dlnetwork object. At the
% first call to this function, the persistent object is constructed and
% set up. When the function is called subsequent times, the same object is
% reused to call predict on inputs, thus avoiding reconstructing and
% reloading the network object. The class names are also loaded from
% labels.mat into the persistent variable labels.

persistent dlnet;
persistent labels;

if isempty(dlnet)
    dlnet = coder.loadDeepLearningNetwork('dlnet.mat');
    labels = coder.load('labels.mat');
end

% The dlnetwork object requires dlarray inputs, so convert the input to a
% formatted dlarray (spatial, spatial, channel, time)
dlIn = dlarray(in, 'SSCT');

% Pass the input to the network and perform prediction
dlOut = predict(dlnet, dlIn);
scores = extractdata(dlOut);
classNames = labels.classNames;

% Convert prediction scores to labels
out = scores2label(scores,classNames,1);
```
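The data-handling steps of video_classify can be tried in MATLAB without the trained network. The sketch below formats a synthetic clip with the 'SSCT' labels (two spatial dimensions, one channel dimension, one time dimension) and converts a made-up score vector to a label with scores2label. The clip length, the class names, and the score values are hypothetical; the code assumes Deep Learning Toolbox in a release that includes scores2label.

```matlab
% Synthetic input: 224-by-224-by-3 frames over 5 time steps (hypothetical length)
in = rand(224,224,3,5,'single');

% Label the dimensions as spatial, spatial, channel, time, as video_classify does
dlIn = dlarray(in,'SSCT');
disp(dims(dlIn))   % SSCT

% Hypothetical class names and scores: one score per class along dimension 1
classNames = ["clapping" "pushup" "waving"];
scores = single([0.1; 0.7; 0.2]);

% Select the class with the highest score along dimension 1
label = scores2label(scores,classNames,1);
disp(label)
```

Passing 1 as the third argument tells scores2label that the classes run along the first dimension of scores, matching the call in the entry-point function.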

Download the Pretrained Network

Run the downloadVideoClassificationNetwork helper function to download the video classification network and save the network and its class names in the MAT files dlnet.mat and labels.mat, which the video_classify function loads.

```matlab
downloadVideoClassificationNetwork();
```

Generate MEX Function

To generate a MEX function, create a coder.MexCodeConfig object named cfg and set its TargetLang property to C++. To generate code that does not use any third-party libraries, create a deep learning configuration object by calling coder.DeepLearningConfig with the target library 'none', and assign it to the DeepLearningConfig property of cfg.

```matlab
cfg = coder.config('mex');
cfg.TargetLang = 'C++';
cfg.DeepLearningConfig = coder.DeepLearningConfig('none');
```

Use the coder.typeof function to specify the type and size of the input argument to the entry-point function. In this example, the input is a single array of size 224-by-224-by-3-by-N, where the sequence length N is variable and unbounded: the fourth dimension is Inf and is the only dimension marked as variable-size.

```matlab
Input = coder.typeof(single(0),[224 224 3 Inf],[false false false true]);
```
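If the clips you plan to classify never exceed a known number of frames, one option is to give the fourth dimension a finite upper bound instead of Inf. The sketch below assumes a hypothetical limit of 300 frames; with a bounded type, the generated MEX function checks the input size at call time and rejects longer sequences. It assumes MATLAB Coder, which this example already requires.

```matlab
% Hypothetical upper bound on the sequence length
maxFrames = 300;

% 224-by-224-by-3 frames; only the 4th (time) dimension may vary, up to maxFrames
InputBounded = coder.typeof(single(0),[224 224 3 maxFrames],[false false false true]);

% Inspect the resulting type
disp(InputBounded.SizeVector)
disp(InputBounded.VariableDims)
```

To use it, you would pass {InputBounded} instead of {Input} after -args in the codegen command.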

Generate a MEX function by running the codegen command.

```matlab
codegen -config cfg video_classify -args {Input} -report
```

Code generation successful.

Run Generated MEX Function

Run the generated MEX function with the center-cropped video input.

```matlab
output = video_classify_mex(single(video))
```

```
output = categorical
     pushup
```

Overlay the prediction onto the input video.

```matlab
video = readVideo(filename);
numFrames = size(video,4);
figure
for i = 1:numFrames
    frame = video(:,:,:,i);
    frame = insertText(frame,[1 1],char(output),'TextColor',[255 255 255], ...
        'FontSize',30,'BoxColor',[0 0 0]);
    imshow(frame/255);
    drawnow
end
```

Helper Functions

The readVideo helper function reads a video file, either in MATLAB or on a Jetson™ device, and returns it as a 4-D array of frames. The frameSize argument is used only when reading on the Jetson device.

```matlab
function video = readVideo(filename, frameSize)
if coder.target('MATLAB')
    vr = VideoReader(filename);
else
    hwobj = jetson();
    vr = VideoReader(hwobj, filename, 'Width', frameSize(1), 'Height', frameSize(2));
end

H = vr.Height;
W = vr.Width;
C = 3;

% Preallocate video array
numFrames = floor(vr.Duration * vr.FrameRate);
video = zeros(H,W,C,numFrames);

% Read frames
i = 0;
while hasFrame(vr)
    i = i + 1;
    video(:,:,:,i) = readFrame(vr);
end

% Remove unallocated frames
if size(video,4) > i
    video(:,:,:,i+1:end) = [];
end
end
```

The centerCrop helper function crops each frame of a video to a central square, trimming the width of landscape video or the height of portrait video, and then resizes the frames to the specified input size.

```matlab
function videoResized = centerCrop(video,inputSize)
% Copyright 2020-2021 The MathWorks, Inc.

sz = size(video);
videoTmp = video;

if sz(1) < sz(2)
    % Video is landscape
    idx = floor((sz(2) - sz(1))/2);
    videoTmp(:,1:(idx-1),:,:) = [];
    videoTmp(:,(sz(1)+1):end,:,:) = [];
elseif sz(2) < sz(1)
    % Video is portrait
    idx = floor((sz(1) - sz(2))/2);
    videoTmp(1:(idx-1),:,:,:) = [];
    videoTmp((sz(2)+1):end,:,:,:) = [];
end

videoResized = imresize(videoTmp,inputSize(1:2));
videoResized = reshape(videoResized, inputSize(1), inputSize(2), inputSize(3), []);
end
```

See Also

coder.DeepLearningConfig | coder.typeof | codegen