Getting Started with YOLO v2

The you-only-look-once (YOLO) v2 object detector uses a single stage object detection network. YOLO v2 is faster than two-stage deep learning object detectors, such as regions with convolutional neural networks (Faster R-CNNs).

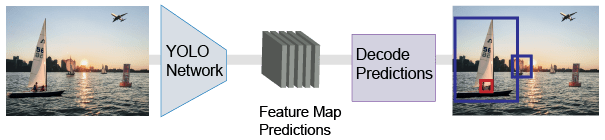

The YOLO v2 model runs a deep learning CNN on an input image to produce network predictions. The object detector decodes the predictions and generates bounding boxes.

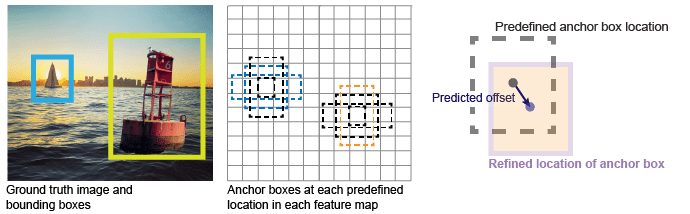

YOLO v2 uses anchor boxes to detect classes of objects in an image. For more details, see Anchor Boxes for Object Detection. YOLO v2 predicts these three attributes for each anchor box:

Intersection over union (IoU) — Predicts the objectness score of each anchor box.

Anchor box offsets — Refine the anchor box position

Class probability — Predicts the class label assigned to each anchor box.

The figure shows predefined anchor boxes (the dotted lines) at each location in a feature map and the refined location after offsets are applied. Matched boxes with a class are in color.

Detect Objects Using Pretrained YOLO v2 Detector

To detect objects using a pretrained YOLO v2 network, first create a yolov2ObjectDetector object by specifying the name of a supported

pretrained network. Pretrained networks include:

darknet19-coco— a YOLO v2 network based on DarkNet-19.tiny-yolov2-coco— a tiny YOLO v2 network with fewer filters and fewer convolutional layers.

Both of these networks are trained on the COCO data set and can detect 80 classes from the COCO data set. To download these YOLO v2 pretrained networks, you must install the Computer Vision Toolbox™ Model for YOLO v2 Object Detection support package.

After you create the pretrained YOLO v2 object detector, detect objects using the

detect

function.

Perform Transfer Learning Using Pretrained YOLO v2 Detector

You perform transfer learning on a pretrained YOLO v2 detector. Create a pretrained

yolov2ObjectDetector object by specifying the name of a network

("darknet19-coco" or "tiny-yolov2-coco"), or

by specifying a dlnetwork object containing a pretrained YOLO v2

network. To configure the YOLO v2 network for transfer learning, also specify the anchor

boxes and the new object classes. If you want the network to perform multiscale

training, then specify multiple training image sizes by setting the

TrainingImageSizes property.

To perform transfer learning using the pretrained YOLO v2 detector and labeled

training data, use the trainYOLOv2ObjectDetector function.

After you perform transfer learning, detect objects using the detect

function.

Train Custom YOLO v2 Network

If you require more control over the YOLO v2 network architecture, then you can create a custom YOLO v2 network. You can convert a pretrained feature extraction network into a custom YOLO v2 network by following these steps. For an example, see Create Custom YOLO v2 Object Detection Network.

Load a pretrained CNN, such as MobileNet v2, by using the

imagePretrainedNetwork(Deep Learning Toolbox) function.Select a feature extraction layer from the base network. This layer is the input to the detection head.

Create a

yolov2ObjectDetectorobject, specifying the base feature extraction network and the name of the feature extraction layer. Also, specify the names of the classes and the anchor boxes as inputs for training the network. You can set additional properties to adjust the behavior of the YOLO v2 object detector:If you want the network to perform multiscale training, then specify multiple training image sizes by setting the

TrainingImageSizeproperty.If you want to improve the detection accuracy for small objects, then add a reorganization branch by specifying the

ReorganizeLayerSourceproperty.

The

yolov2ObjectDetectorobject deletes all of the layers from the base network after the feature extraction layer. The object also assembles a detection head that ends with a depth concatenation layer and ayolov2TransformLayerthat transforms the raw CNN output into a form required to produce object detections. Finally, the object connects the output of the feature extraction layer to the detection head. The figure shows the layers of the detection head.When you optionally include a reorganization branch, the object adds a

spaceToDepthLayeranddepthConcatenationLayer(Deep Learning Toolbox) to the network. The space-to-depth layer extracts low-level features from the base network. The depth concatenation layer combines the high-level features from the detection head with the low-level features. The figure shows a modified network that includes a reorganization branch.

Tip

Starting with a pretrained network is typically much faster and easier than

assembling a base network layer by layer. However, if you need to assemble the base

network, than you can use the interactive Deep Network

Designer (Deep Learning Toolbox) app, or functions such as addLayers (Deep Learning Toolbox) and connectLayers (Deep Learning Toolbox).

After you create the custom YOLO v2 network, train the network on a labeled data set

by using the trainYOLOv2ObjectDetector function. For an example, see Object Detection Using YOLO v2 Deep Learning.

After you train the network, detect objects using the detect

function.

Code Generation

To learn how to generate CUDA® code using the YOLO v2 object detector (created using the yolov2ObjectDetector object) see Code Generation for Object Detection by Using YOLO v2.

Label Training Data for Deep Learning

You can use the Image Labeler,

Video Labeler,

or Ground Truth Labeler (Automated Driving Toolbox) apps to interactively

label pixels and export label data for training. The apps can also be used to label

rectangular regions of interest (ROIs) for object detection, scene labels for image

classification, and pixels for semantic segmentation. To create training data from any

of the labelers exported ground truth object, you can use the objectDetectorTrainingData or pixelLabelTrainingData functions. For more details, see Training Data for Object Detection and Semantic Segmentation.

References

[1] Redmon, Joseph, and Ali Farhadi. “YOLO9000: Better, Faster, Stronger.” In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 6517–25. Honolulu, HI: IEEE, 2017. https://doi.org/10.1109/CVPR.2017.690.

[2] Redmon, Joseph, Santosh Divvala, Ross Girshick, and Ali Farhadi. "You only look once: Unified, real-time object detection." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 779–788. Las Vegas, NV: CVPR, 2016.

See Also

Apps

- Image Labeler | Ground Truth Labeler (Automated Driving Toolbox) | Video Labeler | Deep Network Designer (Deep Learning Toolbox)

Objects

yolov2ObjectDetector|yolov2TransformLayer|spaceToDepthLayer|depthConcatenationLayer(Deep Learning Toolbox)

Functions

trainYOLOv2ObjectDetector|analyzeNetwork(Deep Learning Toolbox)