Symbol Error Rate

Symbol error rate (SER) is the ratio of the number of symbol errors in the recovered symbol pattern to the total number of symbols sent. SER is measured by sampling at the recovered clock times and applying the model thresholds.
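Written as a formula, SER is a simple ratio. The symbols $N_\text{err}$ (number of symbol errors) and $N_\text{sym}$ (number of symbols sent that take part in the comparison) are introduced here for illustration only. This sketch assumes that symbols dropped as ignored bits are not counted in $N_\text{sym}$.

$$
\mathrm{SER} = \frac{N_\text{err}}{N_\text{sym}}
$$

For example, 3 symbol errors out of 10,000 compared symbols gives $\mathrm{SER} = 3 \times 10^{-4}$.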

SER is calculated from the input stimulus symbol pattern and the equalized waveform. To calculate SER:

1. The equalized waveform is latched at the recovered clock times, which converts it to one voltage per UI (unit interval).
2. The model thresholds are applied to each latched voltage to determine the symbol level in each UI.
3. The delay between the stimulus symbol pattern and the latched symbol data is calculated.
4. The delay and the ignored bits are removed to align the stimulus and latched symbol patterns, which are then compared to find the symbol errors.
5. The erroneous symbols are counted to calculate the symbol error rate.
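The steps above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the toolbox implementation: the equalized waveform is an array sampled at increasing times `t`, the recovered clock times are already known, thresholds are ascending voltages, and the delay is found by a brute-force search for the lag with the most matching symbols (a stand-in for whatever alignment method the tool actually uses). The function names and the `ignore_symbols` and `max_delay` parameters are hypothetical.

```python
import numpy as np


def latch(t, waveform, clock_times):
    """Sample the equalized waveform at the recovered clock times: one voltage per UI."""
    # np.interp assumes t is increasing; values between samples are linearly interpolated.
    return np.interp(clock_times, t, waveform)


def slice_symbols(voltages, thresholds):
    """Apply decision thresholds: level k means thresholds[k-1] <= v < thresholds[k]."""
    return np.digitize(voltages, np.sort(np.asarray(thresholds, dtype=float)))


def estimate_delay(stimulus, recovered, max_delay):
    """Find the lag (in UI) at which the recovered symbols best match the stimulus."""
    best_lag, best_matches = 0, -1
    for lag in range(max_delay + 1):
        n = min(len(stimulus), len(recovered) - lag)
        if n <= 0:
            break
        matches = np.count_nonzero(recovered[lag:lag + n] == stimulus[:n])
        if matches > best_matches:
            best_lag, best_matches = lag, matches
    return best_lag


def symbol_error_rate(stimulus, recovered, ignore_symbols, max_delay=64):
    """Align, drop ignored symbols, compare, and count errors.

    Returns (ser, error_indices, delay); error indices are positions in the stimulus.
    """
    stimulus = np.asarray(stimulus)
    recovered = np.asarray(recovered)
    delay = estimate_delay(stimulus, recovered, max_delay)
    aligned = recovered[delay:]
    # Surplus received symbols (for example, after a CDR loss of lock) are truncated from the end.
    n = min(len(stimulus), len(aligned))
    if ignore_symbols >= n:
        raise ValueError("ignore_symbols leaves no symbols to compare")
    sent = stimulus[ignore_symbols:n]
    got = aligned[ignore_symbols:n]
    error_indices = np.flatnonzero(sent != got) + ignore_symbols
    return error_indices.size / sent.size, error_indices, delay


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    stimulus = rng.integers(0, 4, 1000)                            # PAM4 symbols 0..3
    channel = np.concatenate([np.zeros(5, dtype=int), stimulus])   # 5 UI of latency
    channel[105] = (channel[105] + 1) % 4                          # inject two symbol errors
    channel[505] = (channel[505] + 1) % 4
    levels = np.array([-3.0, -1.0, 1.0, 3.0])                      # assumed PAM4 voltages
    sps = 8                                                        # samples per UI
    wave = np.repeat(levels[channel], sps)
    t = np.arange(wave.size) / sps                                 # time in UI
    clock = np.arange(channel.size) + 0.5                          # sample mid-UI
    recovered = slice_symbols(latch(t, wave, clock), [-2.0, 0.0, 2.0])
    ser, errors, delay = symbol_error_rate(stimulus, recovered, ignore_symbols=10)
    print(delay, errors, ser)  # prints delay 5, errors [100 500], and SER 2/990
```

Matching whole symbols rather than correlating voltages keeps the delay search independent of the signal levels; a real implementation would likely use a faster correlation.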

Once calculated, SER is reported as a metric in the time-domain simulation results. You can click the SER metric cell to open a plot of the stimulus versus recovered data symbols.

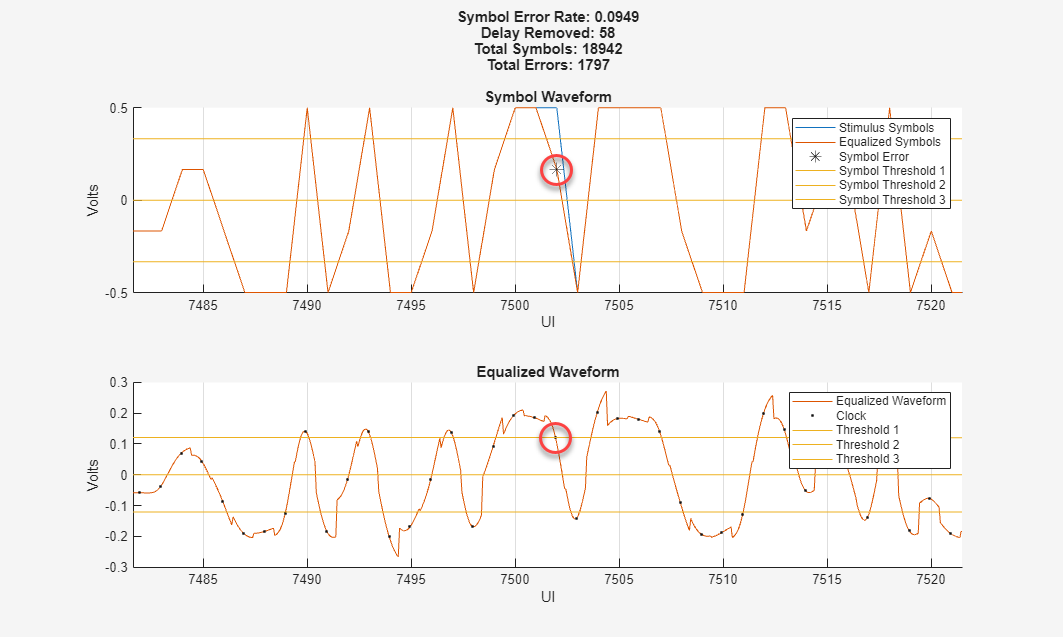

The errors are marked in the symbol waveform. You can cross-reference the error at a symbol point with the equalized waveform to see the margin of error for that symbol. In this figure, the errors stop after approximately 7500 UI, which corresponds to the point where the model thresholds stabilize and the CDR locks in the equalized waveform plot. You can use this information to set the ignore time appropriately.
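One way to read a suitable ignore time from the error positions is to find where the start-up burst of errors ends. The helper below is hypothetical and heuristic: it treats the burst as ending at the first error that is followed by at least `quiet_ui` error-free UI, and it returns `None` when the errors never settle, because an ignore time alone cannot remove steady-state errors. The `quiet_ui` default is an arbitrary assumption.

```python
import numpy as np


def startup_error_end(error_indices, total_ui, quiet_ui=1000):
    """Return the UI just after the last error of the start-up burst.

    The burst ends at the first error followed by at least `quiet_ui`
    error-free UI (or by the end of the record). Returns None if no such
    quiet stretch exists.
    """
    errs = np.sort(np.asarray(error_indices))
    if errs.size == 0:
        return 0
    next_err = np.append(errs[1:], total_ui)  # following error position, or end of record
    gaps = next_err - errs - 1                # error-free UI after each error
    first_quiet = np.flatnonzero(gaps >= quiet_ui)
    if first_quiet.size == 0:
        return None  # errors persist; adjusting the ignore time will not fix them
    return int(errs[first_quiet[0]]) + 1
```

Adding a safety margin to the returned UI count and multiplying by the symbol time gives a candidate ignore time in seconds.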

You can also zoom in on individual errors to analyze why they occurred. The circled error in the zoomed-in plot shows that the clock samples that symbol slightly late. As a result, the symbol is recovered at the symbol 2 level, whereas the stimulus was sent at the symbol 3 level. Moving the clock earlier or lowering the threshold eliminates the error.
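The margin of a single decision can be expressed as the signed distance from the latched voltage to the nearest edge of the decision region of the level that was sent. This hypothetical helper assumes levels are numbered from 0 at the lowest voltage upward and that the thresholds separate adjacent levels; a negative margin means the symbol is recovered at a neighboring level.

```python
import math


def decision_margin(voltage, intended_level, thresholds):
    """Signed distance from a latched voltage to the nearest edge of its intended region.

    Positive: recovered correctly. Negative: falls into a neighboring level.
    Levels are numbered 0..len(thresholds) from the lowest voltage upward (assumption).
    """
    th = sorted(thresholds)
    lower = th[intended_level - 1] if intended_level > 0 else -math.inf
    upper = th[intended_level] if intended_level < len(th) else math.inf
    return min(voltage - lower, upper - voltage)
```

With illustrative values (not taken from the figure), a level 3 symbol latched at 1.8 V against thresholds of -2, 0, and 2 V has a margin of about -0.2 V and is recovered as level 2; lowering the top threshold to 1.7 V gives a margin of about +0.1 V.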

You can also check whether the errors follow a pattern, which can indicate symbol mapping issues in your data recovery.
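A simple way to look for a mapping pattern is to tabulate, for every compared UI, which level was sent and which was recovered. The `confusion_counts` helper below is a hypothetical sketch that assumes the stimulus and recovered symbols are already aligned integer levels.

```python
import numpy as np


def confusion_counts(sent, recovered, n_levels):
    """counts[i, j] = number of UIs where level i was sent and level j was recovered."""
    counts = np.zeros((n_levels, n_levels), dtype=int)
    # np.add.at accumulates correctly even when the same (i, j) pair repeats.
    np.add.at(counts, (np.asarray(sent), np.asarray(recovered)), 1)
    return counts
```

Correct symbols land on the diagonal. A consistent mapping issue, such as levels 1 and 2 swapped, shows up as rows where an off-diagonal count (here `counts[1, 2]` and `counts[2, 1]`) rivals or exceeds the diagonal entry.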

If the CDR loses lock, you can receive more symbols than were transmitted. In this case, the additional symbols are truncated from the end of the symbol waveform. Loss of lock can be caused by signal jitter.