Generate CUDA ROS Node from Simulink

This example shows how to configure Simulink® Coder™ to generate and build a CUDA® ROS node from a Simulink model. You configure the model for simulation and CUDA code generation, and then deploy the CUDA ROS node to a local or remote device target.

Prerequisites

Install and set up these MathWorks® products and support packages:

- Simulink Coder (required)
- Embedded Coder® (recommended)
- GPU Coder™ (required)
- GPU Coder Interface for Deep Learning (required for deep learning)
- MATLAB® Coder™ Support Package for NVIDIA® Jetson™ and NVIDIA DRIVE™ Platforms (required for NVIDIA hardware connection)

You also need a development computer with a CUDA-enabled NVIDIA GPU.

Your target device can be a local host computer with a CUDA-enabled NVIDIA GPU, or a remote device such as an NVIDIA Jetson board.

To use GPU Coder for CUDA code generation, you must install the NVIDIA graphics driver, the CUDA toolkit, and the cuDNN and TensorRT libraries. For more information, see Installing Prerequisite Products (GPU Coder).

To set up the environment variables, see Setting Up the Prerequisite Products (GPU Coder).

To ensure you have the required third-party software, see ROS Toolbox System Requirements.

Verify GPU Environment for GPU Code Generation

To verify that your development computer has the drivers, tools, libraries, and configuration required for GPU code generation, enter these commands in the MATLAB Command Window:

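```matlab
% Create a GPU environment configuration object and choose the checks to run
gpuEnvObj = coder.gpuEnvConfig;
gpuEnvObj.BasicCodegen = 1;            % basic CUDA code generation
gpuEnvObj.BasicCodeexec = 1;           % execution of generated CUDA code
gpuEnvObj.DeepLibTarget = "tensorrt";  % deep learning library to check
gpuEnvObj.DeepCodeexec = 1;            % execution of deep learning code
gpuEnvObj.DeepCodegen = 1;             % deep learning code generation

% Run the checks; results holds a pass (1) or fail (0) flag for each check
results = coder.checkGpuInstall(gpuEnvObj)
```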

The output is representative. Your results might differ.

```
Compatible GPU : PASSED
CUDA Environment : PASSED
Runtime : PASSED
cuFFT : PASSED
cuSOLVER : PASSED
cuBLAS : PASSED
cuDNN Environment : PASSED
TensorRT Environment : PASSED
Basic Code Generation : PASSED
Basic Code Execution : PASSED
Deep Learning (TensorRT) Code Generation: PASSED
Deep Learning (TensorRT) Code Execution: PASSED

results =

  struct with fields:

    gpu: 1
    cuda: 1
    cudnn: 1
    tensorrt: 1
    basiccodegen: 1
    basiccodeexec: 1
    deepcodegen: 1
    deepcodeexec: 1
    tensorrtdatatype: 1
    profiling: 0
```
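If you run this check from a script, you may want to stop before configuring the model when a required component is missing. The following is a minimal sketch that continues from the command above and assumes `results` is the structure it returned. The list of required fields is an assumption for a model with deep learning blocks that targets TensorRT; remove `cudnn`, `tensorrt`, `deepcodegen`, and `deepcodeexec` if your model has none. The error identifier `cudaRosNode:gpuEnvCheckFailed` is hypothetical.

```matlab
% Continues from: results = coder.checkGpuInstall(gpuEnvObj)
% Assumed list of checks this workflow needs; profiling is excluded because
% gpuEnvObj did not request it.
requiredChecks = {'gpu', 'cuda', 'cudnn', 'tensorrt', ...
                  'basiccodegen', 'basiccodeexec', ...
                  'deepcodegen', 'deepcodeexec'};

% True for each check that is present in results and reported as passed
passed = cellfun(@(name) isfield(results, name) && logical(results.(name)), ...
                 requiredChecks);
failedChecks = requiredChecks(~passed);

if isempty(failedChecks)
    disp('All required GPU environment checks passed.');
else
    % Hypothetical error identifier
    error('cudaRosNode:gpuEnvCheckFailed', ...
        'GPU environment checks failed: %s', strjoin(failedChecks, ', '));
end
```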

Configure Simulink Model for Simulation and GPU Code Generation

Follow these steps to configure a model to generate a CUDA ROS node.

Open the Simulink model you want to configure for GPU code generation.

From the Simulation tab, in the Prepare section, expand the gallery and, under Configuration & Simulation, select Model Settings.

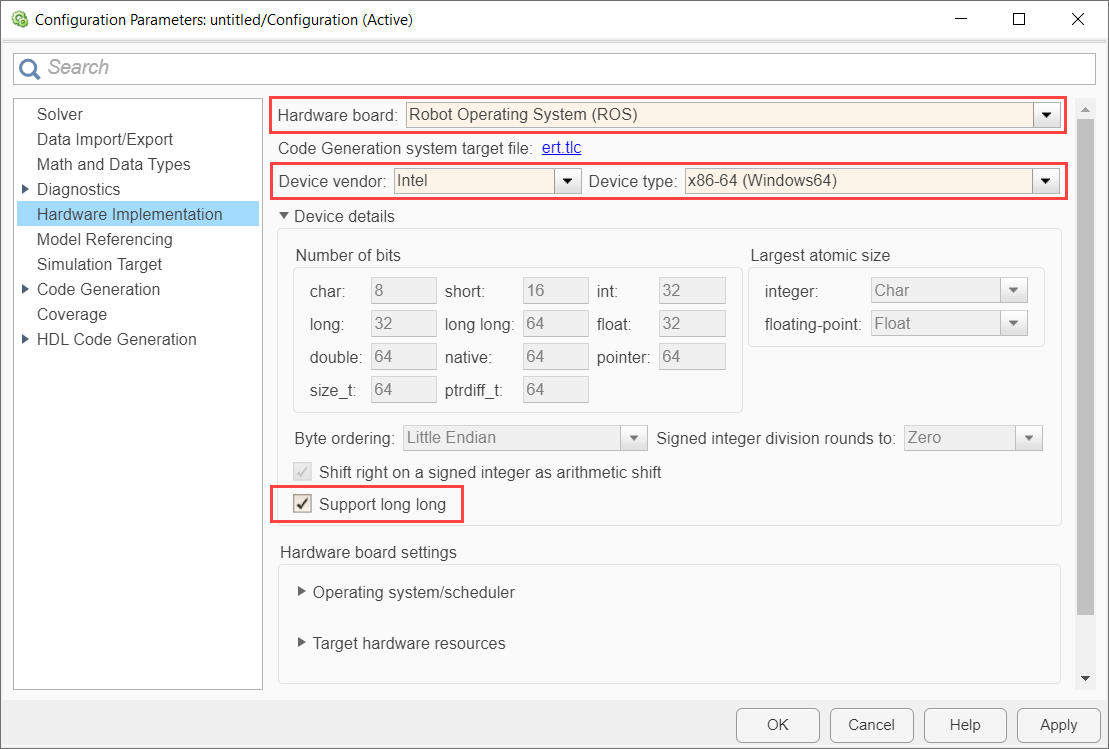

From the left pane of the Configuration Parameters dialog box, select the Hardware Implementation node. Set Hardware Board to Robot Operating System (ROS), and specify the Device Vendor and Device Type for your hardware. Expand Device details and verify that Support long long is selected.

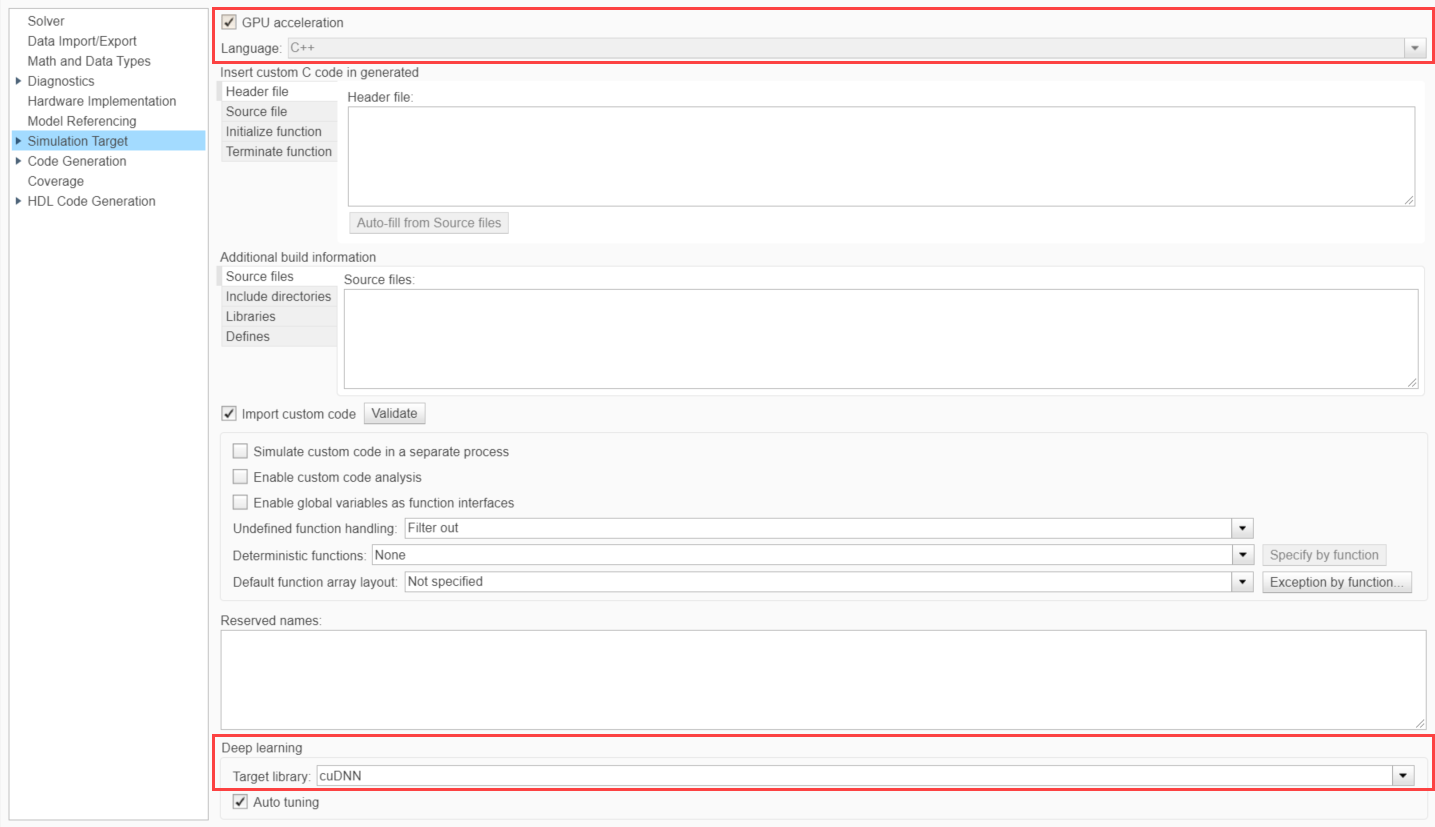
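You can also make the Hardware Implementation settings programmatically with `set_param`. This minimal sketch assumes a hypothetical model named `myCudaRosModel` on the MATLAB path. The device type value `'Intel->x86-64 (Linux 64)'` is only an example for a 64-bit Linux host; use the vendor and type that match your hardware, for example an ARM-based value for a Jetson board.

```matlab
model = 'myCudaRosModel';   % hypothetical model name
load_system(model);

% Hardware Implementation > Hardware board
set_param(model, 'HardwareBoard', 'Robot Operating System (ROS)');

% Device vendor and device type, written as 'Vendor->Type'.
% Example value for a 64-bit Linux host; replace it to match your target.
set_param(model, 'ProdHWDeviceType', 'Intel->x86-64 (Linux 64)');

% Device details > Support long long
set_param(model, 'ProdLongLongMode', 'on');
```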

In the Simulation Target node, select GPU acceleration. If your model has deep learning blocks, then, under Deep Learning, select the appropriate Target library.

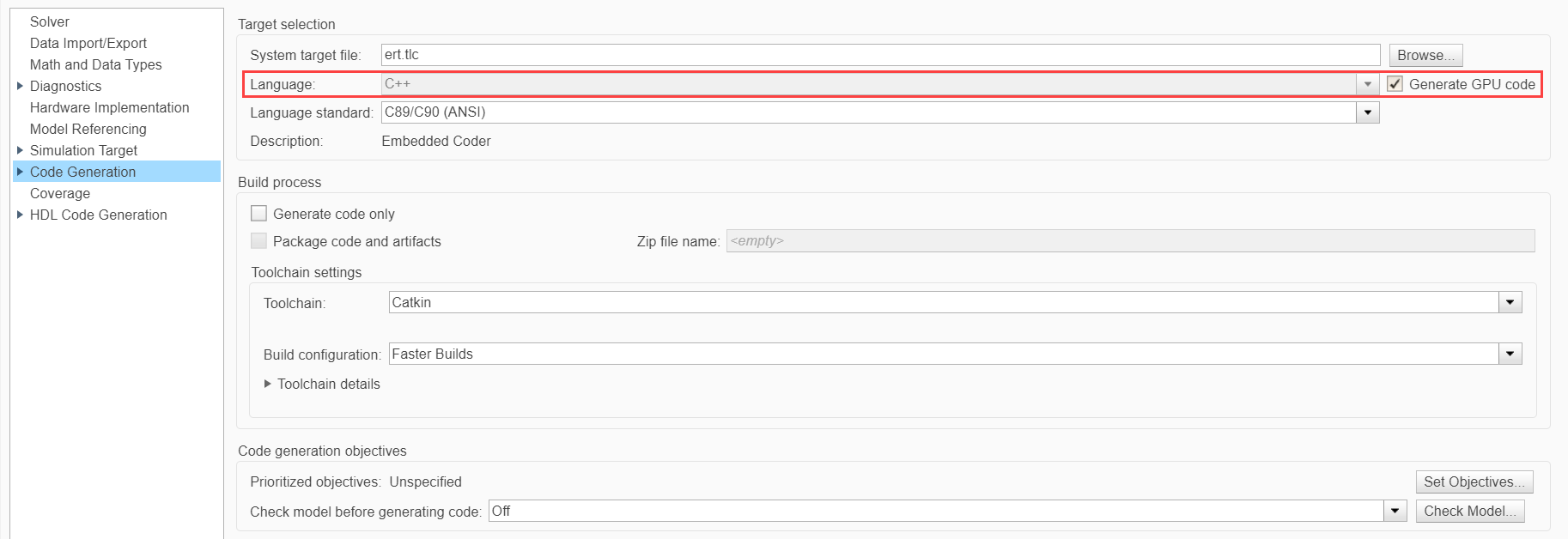
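A programmatic equivalent of the Simulation Target settings might look like the following sketch, again assuming the hypothetical model `myCudaRosModel`. It assumes `SimDLTargetLibrary` is the parameter behind the Deep Learning Target library option and selects cuDNN as an example; skip that line if your model has no deep learning blocks, and confirm the parameter name and allowed values for your release.

```matlab
model = 'myCudaRosModel';   % hypothetical model name
load_system(model);

% Simulation Target > GPU acceleration
set_param(model, 'GPUAcceleration', 'on');

% Simulation Target > Deep Learning > Target library.
% Only needed when the model contains deep learning blocks.
set_param(model, 'SimDLTargetLibrary', 'cudnn');
```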

Select the Code Generation node. Under Target selection, set Language to C++, and select Generate GPU code.

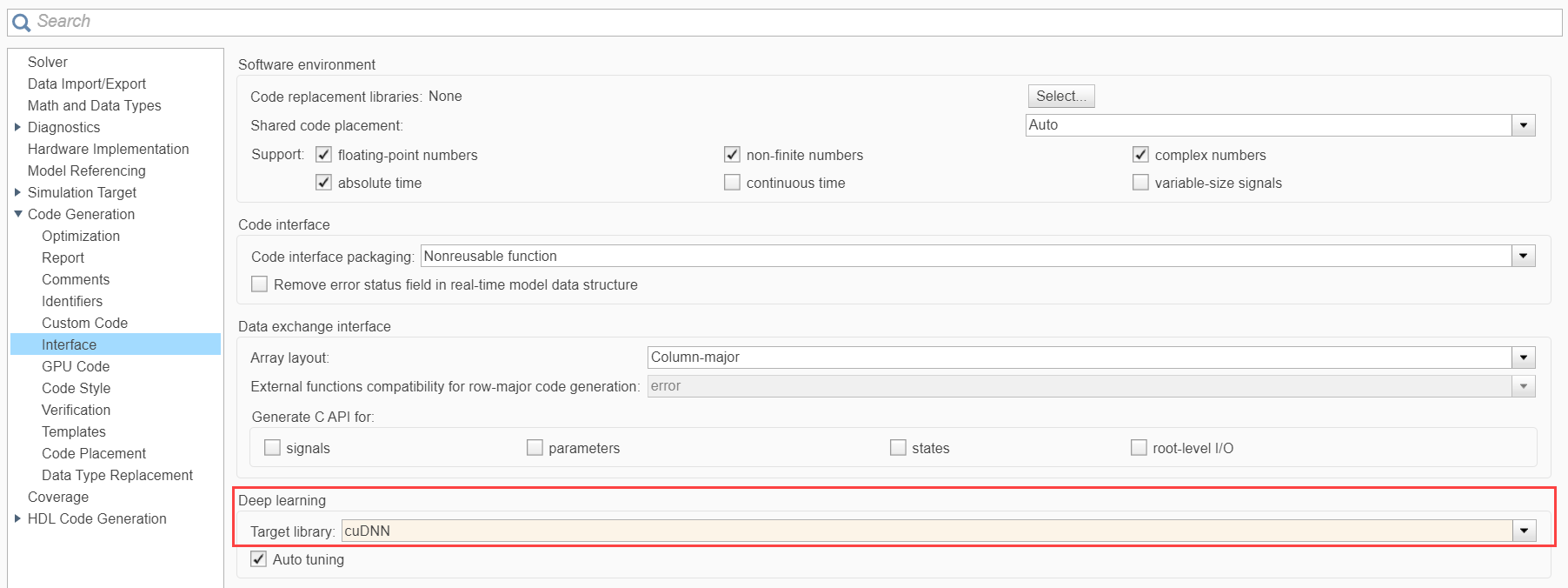
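The same target selection can be applied from the command line. In this sketch, which assumes the hypothetical model `myCudaRosModel`, `TargetLang` corresponds to Language and `GenerateGPUCode` with the value `'CUDA'` corresponds to Generate GPU code.

```matlab
model = 'myCudaRosModel';   % hypothetical model name
load_system(model);

% Code Generation > Target selection > Language
set_param(model, 'TargetLang', 'C++');

% Code Generation > Target selection > Generate GPU code
set_param(model, 'GenerateGPUCode', 'CUDA');
```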

If your model has deep learning blocks, expand the Code Generation node and select the Interface node. Then, under Deep Learning, select the appropriate value for Target library.

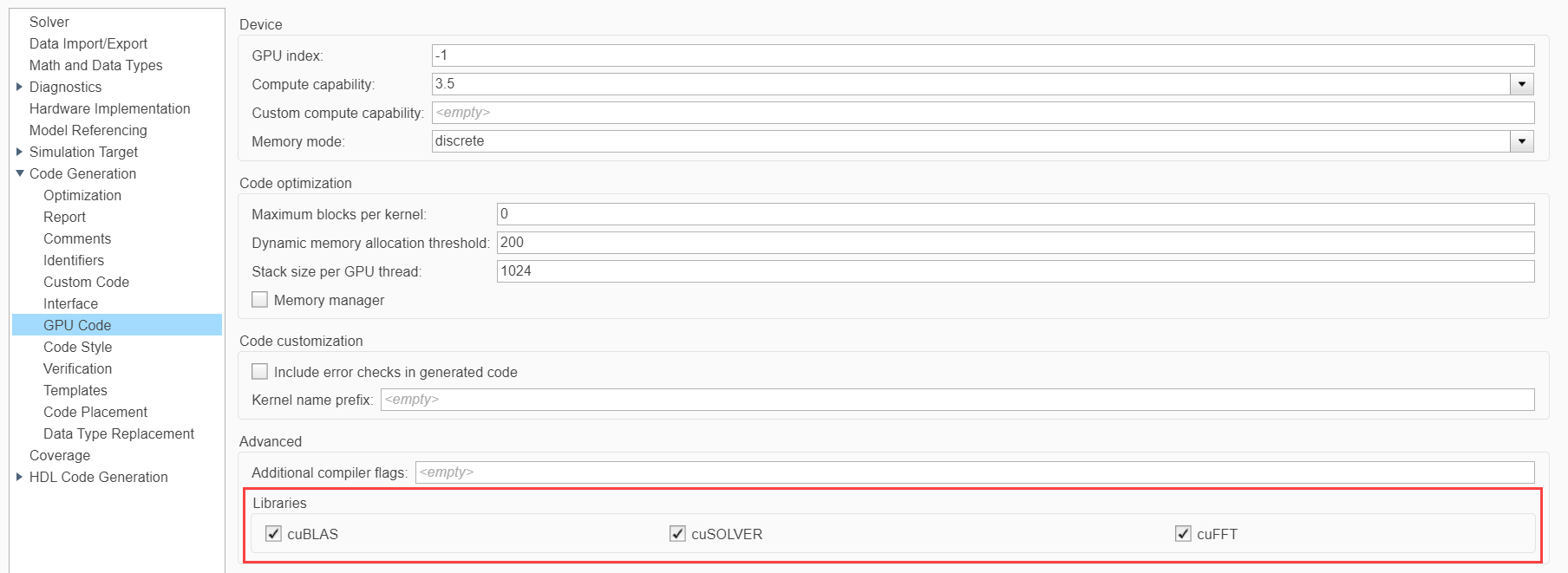
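For models with deep learning blocks, the code generation Target library can be set the same way. This sketch assumes the hypothetical model `myCudaRosModel` and that `DLTargetLibrary` is the parameter behind this option. It selects TensorRT, which matches the library verified with `coder.checkGpuInstall`; `'cudnn'` is the alternative if you do not use TensorRT.

```matlab
model = 'myCudaRosModel';   % hypothetical model name
load_system(model);

% Code Generation > Interface > Deep Learning > Target library
set_param(model, 'DLTargetLibrary', 'tensorrt');
```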

Select the GPU Code node and, under Libraries, enable cuBLAS, cuSOLVER, and cuFFT.
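The GPU Code library options are on/off settings. This sketch assumes `GPUcuBLAS`, `GPUcuSOLVER`, and `GPUcuFFT` are the parameter names of the cuBLAS, cuSOLVER, and cuFFT options; verify them against the GPU Coder configuration parameter reference for your release. The model name is hypothetical.

```matlab
model = 'myCudaRosModel';   % hypothetical model name
load_system(model);

% GPU Code > Libraries: allow generated code to call NVIDIA math libraries
set_param(model, 'GPUcuBLAS', 'on');
set_param(model, 'GPUcuSOLVER', 'on');
set_param(model, 'GPUcuFFT', 'on');
```

Changes made with `set_param` apply to the loaded model only; call `save_system(model)` to keep them.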

Code Generation and Deployment

To generate and deploy a CUDA ROS node to a ROS device, follow the steps in the Generate Standalone ROS Node from Simulink example.
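Once the ROS device settings from that example are in place, a minimal sketch of starting the build from the command line is shown below. It assumes the hypothetical model `myCudaRosModel`; whether `slbuild` only builds the node or also deploys and runs it depends on the build options configured for your ROS device.

```matlab
model = 'myCudaRosModel';   % hypothetical model name
load_system(model);

% Generate CUDA code and build the ROS node using the model's configuration
slbuild(model);
```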

Limitations

Model reference is not supported.

The build folder path cannot contain any spaces.
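Both limitations can be checked before you build. This sketch assumes the hypothetical model `myCudaRosModel` and treats the Simulink code generation folder (or the current folder, if that setting is empty) as the build folder; if your workflow builds elsewhere, for example in a workspace on a remote device, check that path instead. The warning identifiers are hypothetical.

```matlab
model = 'myCudaRosModel';   % hypothetical model name
load_system(model);

% Limitation: model reference is not supported.
% Model blocks (BlockType 'ModelReference') reference other models.
modelBlocks = find_system(model, 'LookUnderMasks', 'all', 'FollowLinks', 'on', ...
    'BlockType', 'ModelReference');
if ~isempty(modelBlocks)
    warning('cudaRosNode:modelReference', ...
        'Model reference is not supported. Model blocks found:\n%s', ...
        strjoin(modelBlocks', newline));
end

% Limitation: the build folder path cannot contain spaces.
% Assumption: the build folder is the code generation folder, which falls
% back to the current folder when the setting is empty.
cfg = Simulink.fileGenControl('getConfig');
buildFolder = cfg.CodeGenFolder;
if isempty(buildFolder)
    buildFolder = pwd;
end
if contains(buildFolder, ' ')
    warning('cudaRosNode:buildFolderSpaces', ...
        'Build folder "%s" contains spaces. Choose a path without spaces.', buildFolder);
end
```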