Identify Shapes Using Machine Learning on Arduino Nano 33 BLE Sense Hardware

This example shows how to use MATLAB® Support Package for Arduino® Hardware to identify shapes using a machine learning algorithm. This example uses the Arduino Nano 33 BLE Sense board, which has an onboard LSM9DS1 IMU sensor.

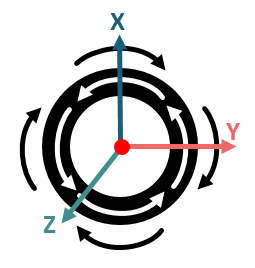

To draw the shapes, hold the Arduino board in your palm and move your hand in the air. The IMU sensor captures the linear acceleration and angular rate data along the X-, Y-, and Z-axes. Send this data to the machine learning algorithm, which identifies the shape you have drawn and transmits the output over Bluetooth® to the Arduino board. The shape identified by the machine learning algorithm is then displayed in the MATLAB Command Window.

To access all the files for this example, click Open Live Script and download the attached files.

Prerequisites

For more information on how to use Arduino hardware with MATLAB, see Get Started with MATLAB Support Package for Arduino Hardware.

For more information on machine learning, see Get Started with Statistics and Machine Learning Toolbox (Statistics and Machine Learning Toolbox).

Required Hardware

Use an Arduino board with an onboard IMU sensor. This example uses an Arduino Nano 33 BLE Sense board, which has an onboard LSM9DS1 IMU sensor. Because the sensor is built into the board, you can easily hold the hardware in your hand while you draw shapes in the air. Alternatively, you can connect an IMU sensor to any Arduino board that has sufficient memory. For more information on how to connect an IMU sensor to your Arduino board, refer to the sensor datasheet.

Either connect a Bluetooth dongle to the computer or use the computer's Bluetooth.

Hardware Setup

Connect the Arduino Nano 33 BLE Sense board to the host computer over Bluetooth. For more information, see Connection over Bluetooth.

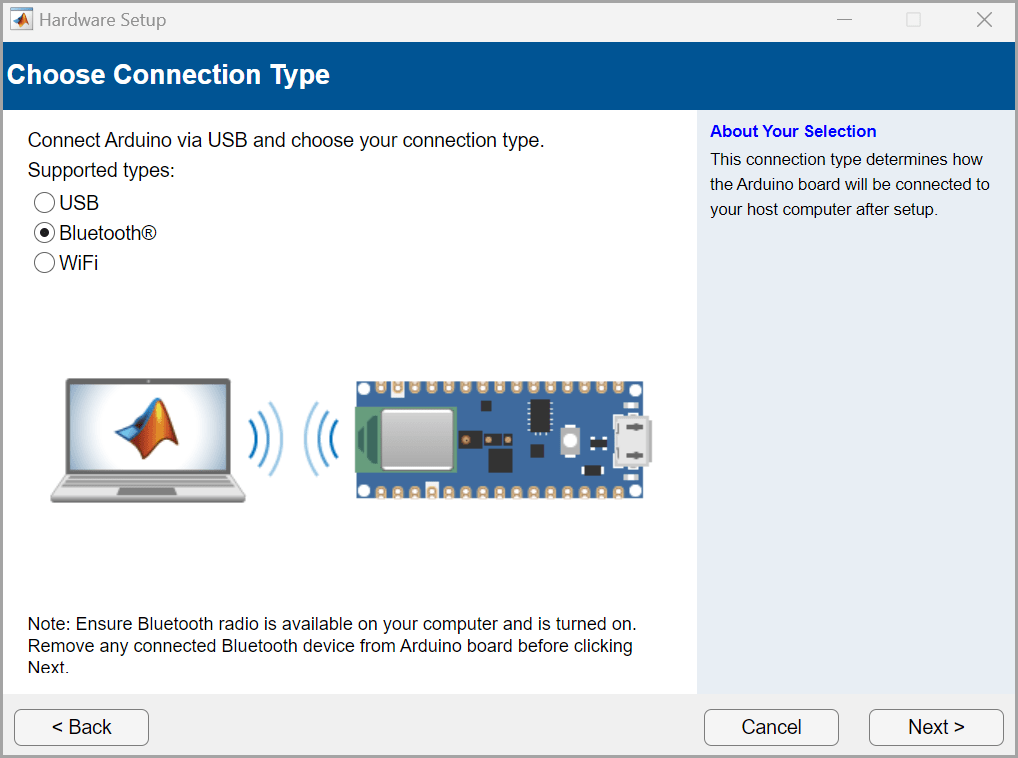

In the Choose Connection Type window under Hardware Setup, set the connection type to Bluetooth.

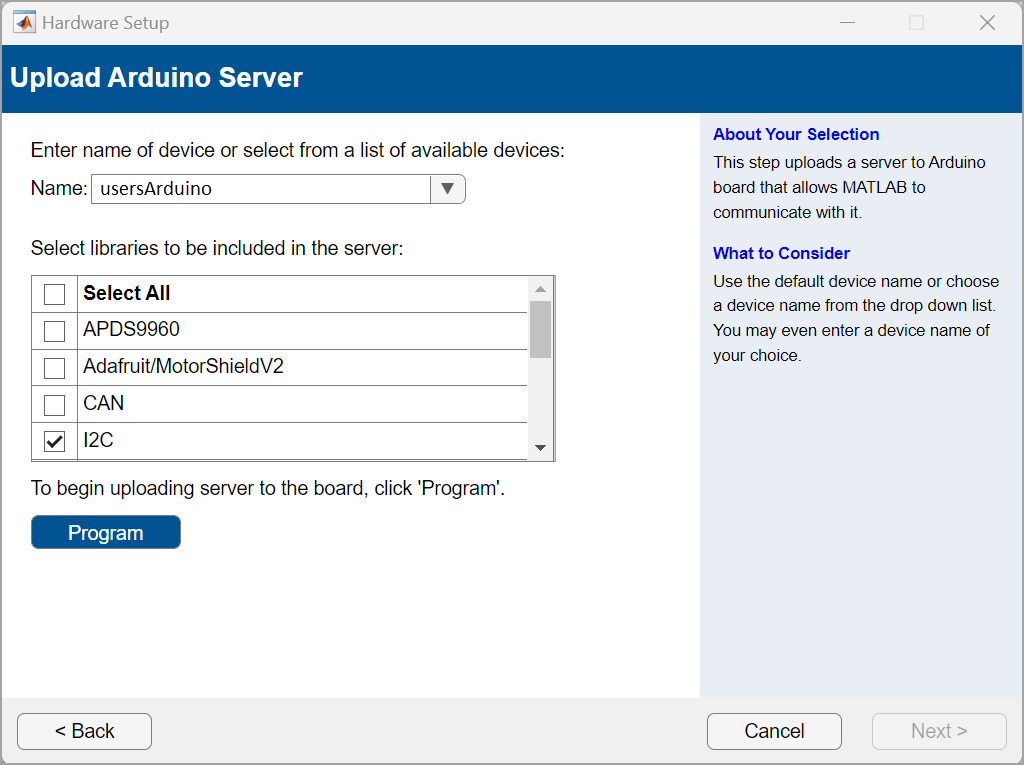

In the Upload Arduino Server window, select the I2C libraries.

Capture Data to Train Machine Learning Algorithm

You can either use the shapes_training_data.mat file that contains the data set for the circle and triangle shapes or capture the data and create the data set manually.

Import Training Data

To use the training data file included with this example, click Open Live Script and download the shapes_training_data.mat file. The shapes_training_data MAT file contains data captured from the accelerometer and gyroscope on the IMU sensor. The data for each shape is grouped into 100 frames, with each frame representing one hand gesture and containing 119 samples. Each sample has six values: one each from the X-, Y-, and Z-axes of the accelerometer and the gyroscope. At 119 samples per frame and 100 frames, this gives 11,900 observations for each of the circle and triangle shapes.
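The layout of the training data can be pictured with a small synthetic stand-in. This sketch assumes, based on how the feature-extraction code later indexes circledata{ind}, that each shape is stored as a cell array of frames and that each frame is a 119-by-6 matrix. The variable circledataDemo and the random values are illustrative only and are not the recorded data.

```matlab
% Synthetic stand-in for one shape's training data (not the real recording).
nFrames = 100;            % frames (gestures) per shape
samplesPerFrame = 119;    % samples read per frame
nChannels = 6;            % accelerometer X/Y/Z plus gyroscope X/Y/Z

rng(0);                   % make the random numbers reproducible
circledataDemo = cell(1, nFrames);
for k = 1:nFrames
    % Each frame is a samples-by-channels matrix
    circledataDemo{k} = randn(samplesPerFrame, nChannels);
end

frameSize = size(circledataDemo{1});        % size of a single frame
totalSamples = nFrames * samplesPerFrame;   % samples per shape
fprintf('Frame size: %d-by-%d, samples per shape: %d\n', ...
    frameSize(1), frameSize(2), totalSamples);
```

After loading the real MAT file, running size(circledata{1}) in the same way is a quick check that the data matches this assumed layout.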

Load the shapes_training_data.mat file.

load shapes_training_data

Capture Data Manually

If you are using the shapes_training_data.mat file, skip this section. However, if you want to capture the training data for the machine learning algorithm manually, execute the capture_training_data.m file.

In the capture_training_data.m file, you can set the acceleration threshold in the accelerationThreshold parameter. For this example, set the threshold to 2.5. For more information on how to adjust the acceleration threshold for an IMU sensor, refer to the sensor datasheet.

%Acceleration threshold to detect motion

accelerationThreshold = 2.5;

To set up a connection with the Arduino board, create an arduino object and specify the name of the board.

%Create arduino object
a=arduino('usersArduino'); % Change this to your Bluetooth name or address

To read data from the LSM9DS1 IMU sensor, create an lsm9ds1 object and specify the number of samples to read in a single execution of the read function. For this example, set the number of samples to read to 119.

%Initialize imu sensor
imu=lsm9ds1(a,'SamplesPerRead',119,'Bus',1);

Specify the number of frames to be captured per gesture in the while loop. For this example, set that value to 100.

while(gesture < 100)
    %Read acceleration data
    accel=imu.readAcceleration;
    %Sum up absolute values of acceleration
    aSum=sum(abs(accel))/9.8;
    %Capture values if there is significant motion
    if aSum>=accelerationThreshold
        gesture=gesture+1;
        %Read IMU sensor data
        imudata=imu.read;
        imudatatable=timetable2table(imudata);
        %Save values in data variable
        data{gesture}=[imudatatable.Acceleration/9.8 rad2deg(imudatatable.AngularVelocity)];
        %Display the captured gesture number
        disp(['Gesture no.' num2str(gesture)]);
    end
end
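The motion check in the loop can be tried without hardware. The following sketch applies the same test to two made-up acceleration readings, assuming the readings are in m/s^2 (which the division by 9.8 suggests). The vectors accelRest and accelMove are hypothetical values chosen for illustration.

```matlab
accelerationThreshold = 2.5;   % threshold in g, as set in this example
gravity = 9.8;                 % m/s^2 per g, matching the divisor in the loop

accelRest = [0.1 -0.2 9.8];    % hypothetical reading: board at rest, gravity on Z
accelMove = [12.0 -9.5 15.0];  % hypothetical reading: board being swung

% Sum of absolute accelerations across the three axes, expressed in g
aSumRest = sum(abs(accelRest))/gravity;
aSumMove = sum(abs(accelMove))/gravity;

% A frame is captured only when the sum reaches the threshold
isMotionRest = aSumRest >= accelerationThreshold;
isMotionMove = aSumMove >= accelerationThreshold;

fprintf('At rest: %.2f g, capture = %d\n', aSumRest, isMotionRest);
fprintf('Moving:  %.2f g, capture = %d\n', aSumMove, isMotionMove);
```

Because a board at rest already contributes about 1 g from gravity, a threshold of 2.5 leaves some margin before a gesture triggers a capture. Raising the threshold makes the capture less sensitive to small movements.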

Hold the Arduino hardware in the palm of your hand and draw a circle in the air. If you want to create a data set of 100 frames for a circle, draw a circle 100 times in the air. Capture the data for the circle shape.

circledata = capture_training_data;

Repeat the procedure for the triangle shape. Capture the data for the triangle shape.

triangledata = capture_training_data;

Store the data in the shapes_training_data.mat file.

save('shapes_training_data','circledata','triangledata');
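The save function takes the names of the variables as text, not the variables themselves. The round trip below illustrates this with small placeholder data written to a temporary file. The names circledataDemo, triangledataDemo, and matFile are hypothetical and used only for this sketch.

```matlab
% Placeholder data standing in for captured frames
circledataDemo = {rand(119,6)};
triangledataDemo = {rand(119,6)};

% Write to a temporary MAT file so no existing file is overwritten
matFile = [tempname '.mat'];
save(matFile, 'circledataDemo', 'triangledataDemo');   % variable names as text

% Load into a structure to inspect what was stored
loaded = load(matFile);
delete(matFile);                                        % clean up the temporary file

disp(fieldnames(loaded));
```

Loading into a structure, as done here, avoids overwriting workspace variables that share the stored names.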

Extract Features, Prepare Data, and Train Model

Extract

The gr_script_shapes.m file included with this example preprocesses the shapes_training_data.mat data set file, trains the machine learning algorithm with the data set, and evaluates whether the algorithm accurately predicts the circle and triangle shapes. The MATLAB function in this file performs a five-fold cross validation for the ensemble classifier and computes the validation accuracy of the algorithm. Execute the gr_script_shapes.m file.

run gr_script_shapes.m

validationAccuracy = 0.9833

testAccuracy = 1

The code in the gr_script_shapes.m file extracts features by calculating the mean and the standard deviation of each column in a frame. Each frame has six columns (the X-, Y-, and Z-axes of the accelerometer and the gyroscope), so each frame yields 12 features. With 100 frames per gesture, this results in a 100-by-12 matrix of observations for each gesture.

Nframe = 100;
for ind = 1:Nframe
    featureC1(ind,:) = mean(circledata{ind});
    featureC2(ind,:) = std(circledata{ind});
    featureT1(ind,:) = mean(triangledata{ind});
    featureT2(ind,:) = std(triangledata{ind});
end
X = [featureC1,featureC2; featureT1,featureT2; zeros(size(featureT1)),zeros(size(featureT2))];
% labels - 1: circle, 2: triangle, 3: idle
Y = [ones(Nframe,1);2*ones(Nframe,1);3*ones(Nframe,1)];
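To check the dimensions without the recorded data, the same feature extraction can be run on synthetic frames. This is a minimal sketch, assuming each frame is a 119-by-6 matrix. The names makeFrames, circleDemo, triangleDemo, featC, and featT are illustrative, and the feature matrices are preallocated here rather than grown inside the loop.

```matlab
rng(0);                       % reproducible synthetic data
Nframe = 100;

% Helper that builds a cell array of n random 119-by-6 frames
makeFrames = @(n) arrayfun(@(k) randn(119,6), 1:n, 'UniformOutput', false);
circleDemo = makeFrames(Nframe);
triangleDemo = makeFrames(Nframe);

% Preallocate: 6 column means plus 6 column standard deviations per frame
featC = zeros(Nframe, 12);
featT = zeros(Nframe, 12);
for ind = 1:Nframe
    featC(ind,:) = [mean(circleDemo{ind}), std(circleDemo{ind})];
    featT(ind,:) = [mean(triangleDemo{ind}), std(triangleDemo{ind})];
end

% Stack circle, triangle, and all-zero "idle" rows, with matching labels
X = [featC; featT; zeros(Nframe, 12)];
Y = [ones(Nframe,1); 2*ones(Nframe,1); 3*ones(Nframe,1)];

fprintf('X is %d-by-%d, Y is %d-by-%d\n', size(X,1), size(X,2), size(Y,1), size(Y,2));
```

The all-zero rows give the classifier an idle class to fall back on, which is why X has three blocks of rows even though only two shapes are recorded.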

Note: You can configure the number of frames to be captured per gesture in the while loop.

Prepare

This example uses 80% of the observations to train a model that classifies the two shapes (plus an idle class) and holds out the remaining 20% to test the trained model. Use cvpartition (Statistics and Machine Learning Toolbox) to partition 20% of the data for the test data set.

rng('default') % For reproducibility
Partition = cvpartition(Y,'Holdout',0.20);
trainingInds = training(Partition); % Indices for the training set
XTrain = X(trainingInds,:);
YTrain = Y(trainingInds);
testInds = test(Partition); % Indices for the test set
XTest = X(testInds,:);
YTest = Y(testInds);
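The holdout split can be examined on placeholder data before running it on real features. This sketch assumes Statistics and Machine Learning Toolbox is installed and uses random numbers in place of the extracted features. The variables part, trainIdx, and testIdx are illustrative names.

```matlab
rng('default');                                  % for reproducibility

% Placeholder features and labels with the same shape as in this example
X = randn(300, 12);
Y = [ones(100,1); 2*ones(100,1); 3*ones(100,1)];

% Hold out 20% of the observations for testing
part = cvpartition(Y, 'Holdout', 0.20);
trainIdx = training(part);                       % logical index of training rows
testIdx = test(part);                            % logical index of test rows

XTrain = X(trainIdx,:);  YTrain = Y(trainIdx);
XTest  = X(testIdx,:);   YTest  = Y(testIdx);

fprintf('Training rows: %d, test rows: %d\n', numel(YTrain), numel(YTest));
```

Every row lands in exactly one of the two sets, so the training and test indices never overlap.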

The training variables are visible in the Workspace pane in MATLAB.

Train Classification Model

Train the classification model using the ensemble classifier.

template = templateTree(...
    'MaxNumSplits', 399);
ensMdl = fitcensemble(...
    XTrain, ...
    YTrain, ...
    'Method', 'Bag', ...
    'NumLearningCycles', 20, ...
    'Learners', template, ...
    'ClassNames', [1; 2; 3]);
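The same training call can be exercised on synthetic data to see how the pieces fit together. This is a sketch under stated assumptions: it requires Statistics and Machine Learning Toolbox, the three clusters are made up and deliberately well separated, and the smaller MaxNumSplits value is an arbitrary choice for the demo rather than a recommendation.

```matlab
rng('default');                                   % for reproducibility
n = 100;

% Synthetic 12-feature data: two shifted clusters plus all-zero "idle" rows
X = [randn(n,12) + 3; randn(n,12) - 3; zeros(n,12)];
Y = [ones(n,1); 2*ones(n,1); 3*ones(n,1)];

% Bagged ensemble of decision trees, mirroring the call in this example
template = templateTree('MaxNumSplits', 20);      % demo value, not tuned
mdl = fitcensemble(X, Y, ...
    'Method', 'Bag', ...
    'NumLearningCycles', 20, ...
    'Learners', template, ...
    'ClassNames', [1; 2; 3]);

% Classify one new feature row
newRow = 3*ones(1,12);
label = predict(mdl, newRow);
fprintf('Predicted label: %d\n', label);
```

Passing ClassNames explicitly fixes the order of the classes in the model, which keeps score columns and labels aligned if you later inspect prediction scores.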

Validate Classifier

Perform five-fold cross-validation for the ensemble classifier and compute the validation accuracy. Then compute the accuracy on the test data to evaluate how the model performs on observations it has not seen.

%% Prediction accuracy
% Perform five-fold cross-validation for classificationEnsemble and compute the validation accuracy.
partitionedModel = crossval(ensMdl,'KFold',5);
validationAccuracy = 1-kfoldLoss(partitionedModel)

%% Test data accuracy
% Evaluate performance of test data
testAccuracy = 1-loss(ensMdl,XTest,YTest)
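A single accuracy number does not show which classes are confused with each other. One way to see that, sketched here with made-up labels, is a confusion matrix built with confusionmat (Statistics and Machine Learning Toolbox). The vectors YTestDemo and YPredDemo are hypothetical. The accuracy computed from the matrix corresponds to 1 minus the loss only under the assumption that the loss is the default misclassification rate with equal observation weights.

```matlab
% Hypothetical true and predicted labels (1: circle, 2: triangle, 3: idle)
YTestDemo = [1; 1; 2; 2; 3; 3];
YPredDemo = [1; 1; 2; 1; 3; 3];    % one triangle misread as a circle

% Rows are true classes, columns are predicted classes
C = confusionmat(YTestDemo, YPredDemo, 'Order', [1 2 3]);

% Fraction of correct predictions: diagonal entries over all entries
accuracy = sum(diag(C)) / sum(C(:));

disp(C);
fprintf('Accuracy: %.4f\n', accuracy);
```

With real data, replace YPredDemo with the output of predict on the test features to see whether errors concentrate in one shape.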

Predict Shape

Run this MATLAB code to predict the shape.

% Create an arduino object with Bluetooth connection
aObj = arduino("usersArduino"); % Change this to required Bluetooth name or address
% Create LSM9DS1 object
imuObj = lsm9ds1(aObj,"Bus",1,"SamplesPerRead",119);
disp('Start capturing gesture data.');

Start capturing gesture data.

% Set the acceleration threshold
accelerationThreshold = 2.5;
% Predict the shapes for 10 seconds
tic;
while (toc<10)
    % Read the acceleration
    accel = readAcceleration(imuObj);
    %Sum up absolute values of acceleration
    aSum = sum(abs(accel))/9.8;
    %Capture values if there is significant motion
    if aSum >= accelerationThreshold
        %Read IMU sensor data
        imudata = read(imuObj);
        imudatatable = timetable2table(imudata);
        %Save values in data variable
        testGesture = [imudatatable.Acceleration/9.8 rad2deg(imudatatable.AngularVelocity)];
        % Get the features of the captured gesture
        feature1 = mean(testGesture);
        feature2 = std(testGesture);
        features = [feature1 feature2];
        % Predict the gesture
        y = predict(ensMdl,features);
        if y == 1
            disp('Circle');
        elseif y == 2
            disp('Triangle');
        end
    end
end
release(imuObj);
clear imuObj aObj;
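The loop above prints nothing when the model returns label 3, the idle class used in training. If you want every prediction to be reported, one option is a lookup from label to name, sketched below. The shapeNames array and the example label are illustrative and are not part of the files shipped with this example.

```matlab
% Names indexed by class label (1: circle, 2: triangle, 3: idle)
shapeNames = ["Circle", "Triangle", "Idle"];

y = 2;                          % hypothetical label returned by predict
shapeName = shapeNames(y);      % look up the name for that label

disp(shapeName);
```

A lookup of this kind replaces the if/elseif chain and extends naturally if you train the model on more shapes.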